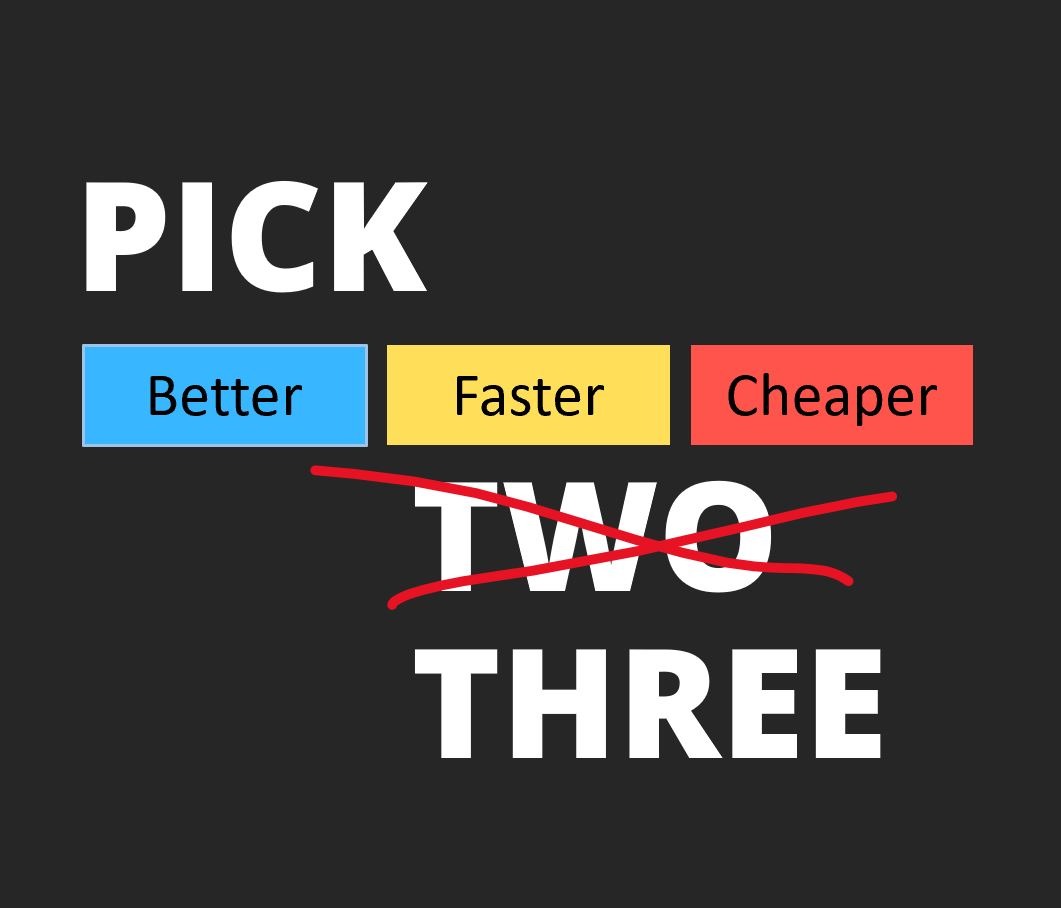

You may have heard the saying “Better, cheaper, faster – pick two”. The idea isn’t new. If you want something really good and you want it quickly, you’re going to have to pay. If you want to save money and still keep the quality, you’ll need to wait. And so on.

But in big data that mantra is being subverted. Thanks to the cloud, you can now deliver data solutions that are more flexible, scalable and, crucially, cheaper and faster. Best of all, it doesn’t mean abandoning quality or reliability – a well-designed solution can actually achieve better quality and consistency.

Not so long ago, if you were planning a data project you might have had to set aside a big chunk of your budget for licences, servers and a data warehouse in which to store your data. On top of this, you’d need a specialised (and potentially expensive) team of people to set up the infrastructure and operate the hardware. This effectively put data analysis out of the reach of many smaller businesses.

The cloud has changed all that – revolutionising the delivery of data analytics. So, what exactly can it offer you?

BETTER

Today’s cloud-based technology is so simple that even the least tech-savvy people are able to reap the rewards. You no longer need to know up front how much storage you require, as companies like Snowflake simply offer small, medium or large solutions plus the option of almost infinite scalability. For many new and smaller businesses the entry package will be enough, allowing you to upload millions of rows of data. And as you expand you can simply scale up.

Conventional wisdom once said that there was no more secure way of storing data than keeping it all on your premises, where it was maintained and managed by a member of staff. In 2019 that is no longer true. Even the most conscientious IT person will be constrained by your budget and facilities. Handing this responsibility over to the likes of Microsoft, with their near-infinite resources, is arguably the safest way of storing your valuable data.

CHEAPER

The maths is simple: with modern data platforms like Snowflake, you just pay for what you use. Previously you would have had to try to work out up front how much space you needed and hope you hadn’t overestimated or underestimated (with the associated painful time and cost implications). Now you can simply scale up or down as and when your business requires. If, for example, your business acquires a new company, it’s easy: simply increase the size of your data warehouse, and the extra capacity is available instantly. At the time of writing, a terabyte of storage with Snowflake is an astonishing $23 per month.
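To make the storage maths concrete, here is a minimal sketch in Python. The only figure taken from this article is the $23 per terabyte per month; the function name and the 2 TB and 5 TB volumes are made up for illustration, and real bills depend on your contract and region.

```python
# Storage price quoted in this article (dollars per terabyte per month).
PRICE_PER_TB_PER_MONTH = 23.0


def monthly_storage_cost(terabytes: float) -> float:
    """Return the monthly storage bill in dollars for a given data volume."""
    if terabytes < 0:
        raise ValueError("terabytes must be non-negative")
    return terabytes * PRICE_PER_TB_PER_MONTH


# Hypothetical volumes: 2 TB today, 5 TB after acquiring a new company.
before = monthly_storage_cost(2)
after = monthly_storage_cost(5)
print(f"Before: ${before:.2f}/month, after: ${after:.2f}/month")
```

With these assumed volumes, the bill grows in step with the data rather than in large up-front jumps.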

This flexibility also means reduced waste. Previously you had to pay for a solution sized for the one day every month when you had to run 10,000 reports. The other 30 days it sat idle, costing you money. Now you can pay for the smallest package for the majority of the month and set it to automatically scale up when you really need the resources.
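The saving from scaling up only when you need to can be sketched with some simple arithmetic. The hourly prices below are hypothetical placeholders, not real vendor rates, and the function names are ours; the point is only to show how one busy day and 30 quiet days compare under the two approaches.

```python
HOURS_PER_DAY = 24
DAYS_IN_MONTH = 31  # one busy day plus the "other 30 days"

# Hypothetical hourly prices, for illustration only.
SMALL_RATE = 2.0   # dollars per hour for the smallest package
LARGE_RATE = 16.0  # dollars per hour for a package sized for the busy day


def fixed_cost(rate: float, days: int = DAYS_IN_MONTH) -> float:
    """Cost of running one package size around the clock all month."""
    return rate * HOURS_PER_DAY * days


def elastic_cost(small_rate: float, large_rate: float,
                 busy_days: int = 1, days: int = DAYS_IN_MONTH) -> float:
    """Cost of running small on quiet days and large only on busy days."""
    quiet_days = days - busy_days
    return (small_rate * quiet_days + large_rate * busy_days) * HOURS_PER_DAY


always_large = fixed_cost(LARGE_RATE)
scale_on_demand = elastic_cost(SMALL_RATE, LARGE_RATE)
print(f"Always large:    ${always_large:,.2f}")
print(f"Scale on demand: ${scale_on_demand:,.2f}")
```

Under these assumed rates, the always-large option costs $11,904.00 for the month and the scale-on-demand option costs $1,824.00. The exact ratio will vary with your own rates and workload.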

FASTER

Remember the sound of whirring fans and the wall of heat that would hit you when you went anywhere near the server room? Thanks to the cloud you can do away with the racks upon racks of energy-guzzling storage and move it all off site, possibly thousands of miles away. This doesn’t make it slower; thanks to modern high-speed networks, you can access your data in a fraction of the time, generating reports in 10 seconds rather than 20 minutes.

Several years ago Snap Analytics was hired by a large automotive manufacturer for a major project based on their premises. At the time cloud storage didn’t have quite the same functionality and wasn’t trusted to do the job. As a result we had to work on site with their people, working within their existing systems just to set up the architecture. It added nearly six months to the project – and quite a few zeros to the final invoice. Thankfully, with modern data platforms, these overheads are eliminated, the scalability is almost infinite and the speed is truly phenomenal. And all delivered at a fraction of the price!