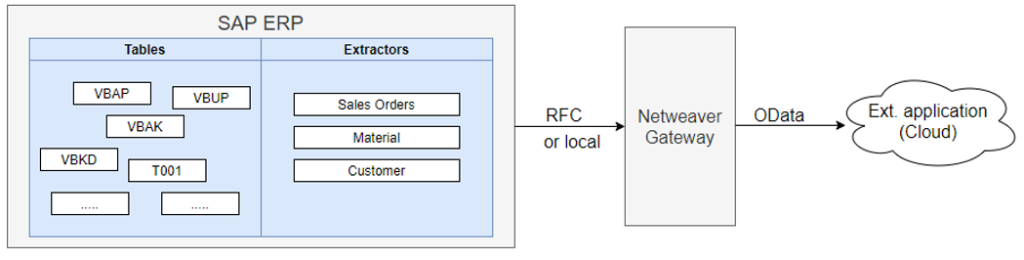

You can now use standard SAP extractors to get data from SAP into any data warehouse, the same way as SAP data is extracted for SAP Business Warehouse (SAP BW). In his previous blog, Jan van Ansem explained the background and relevance of this development. Now, George Whitby and Jan show how easy it is to set up the extraction process and load SAP data into Snowflake, using Matillion.

SAP – Prerequisites

The extractors on your SAP systems need to be ODP enabled. SAP Gateway needs to support SAP OData version 2.0. There should be no issues with this on SAP Netweaver 7.5. On SAP Netweaver 7.4 you probably need to implement some notes and anything older than 7.4 will be a struggle or impossible. The initial configuration consists of two steps: Setting up the Netweaver Gateway project and OData service configuration in transaction SEGW and registering the ODATA service in transaction /IWFND/MAINT_SERVICE. These configuration steps are described in detail in two excellent blogs so I will give you the links instead of repeating those:

https://blogs.sap.com/2020/11/02/exposing-sap-bw-extractors-via-odp-as-an-odata-service/https://blogs.sap.com/2020/05/29/distributing-data-from-bw-system-using-odp-based-data-extraction-and-odata/

Using delta extracts, filters and other options when calling the OData service

Assuming you managed to do all the set-up with some help of the above blogs, you should now be able to do a simple test of your OData extractor in a web browser. The URL would be something like:

https://<server>:<port>/sap/opu/odata/sap/<servicename>/$metadata

or, for the example I will use throughout this blog:

https://<server>:<port>/sap/opu/odata/sap/ZODATA_ODP_DS_EXTRACTORS_SRV_01/EntityOf0CUSTOMER_ATTR

Now this is cool and might be a point solution for an ad-hoc request, but this is of course not sufficient to do enterprise-wide data provisioning out of S/4HANA or SAP ECC. For that, you will need to be able to run deltas instead of full loads, create ad-hoc extracts for individual records

or ranges and go back into the load history to understand what data has and has not been passed on to other applications. Luckily, these are all standard aspects of ODP and available through the OData interface.

The specific OData service components we are interested in in the context of the ODP extractors are:

- Entity Sets: This is the ‘data model’ of the service. In the example above you saw ‘EntityOf0CUSTOMER_ATTR so it will be no surprise there can be similar entity sets for 0MATERIAL_ATTR, 0MATERIAL_TEXT, 0COMP_CODE_ATTR and so on. The ‘EntityOf’ dataset delivers the actual data (material, customer and so on).

In addition to ‘EntityOf’ entity set types, the OData service also provides the entity sets ‘DeltaLinksOfEntityOf’. Again, you can have these for 0CUSTOMER_ATTR, MATERIAL_TEXT or any other datasource. This dataset contains the (delta) load history for each specific dataset. The most important part of this is the timestamp token of the last extract – as this is what you need to provide to get the next delta. An example is included further down in this blog post. -

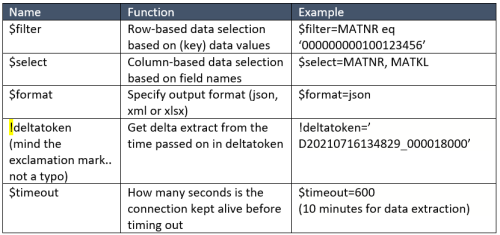

Parameters: These are optional conditions for the OData connector, and are appended to the URL, separated by the question mark symbol. The table below shows some examples.

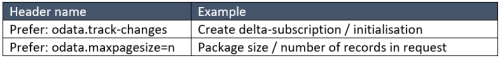

- Headers: These are further instructions about how the OData request should be processed. These parameters are not passed on in the URL as the parameters above but are contained within the OData request. Below are the most significant header key/value pairs for the use of ODP extractors in the OData interface:

In the next section I will show how to use the various entity sets, parameters and headers to get a delta initialisation working followed by delta load, followed by an example of a selective load. In the examples I will be using Matillion to load data to Snowflake, but you can test the same in SAP GUI (Transaction /IWFND/MAINT_SERVICE) or an easy-to-use API Development environment like postman.com.

Example 1: Delta initialisation

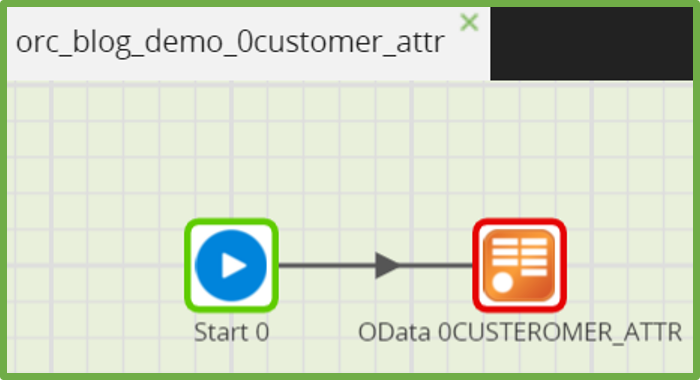

Delta queues get initialised by passing on the header ‘odata.track-changes’. In Matillion, create a simple orchestration job with a single component, ‘OData Query’.

Fill out the username and password, then provide the URL for the OData Service. Make sure you put the name of the service there, not the specific entity. In this example it is:

https://<server>:<port> /sap/opu/odata/sap/ZODATA_ODP_DS_EXTRACTORS_SRV_01

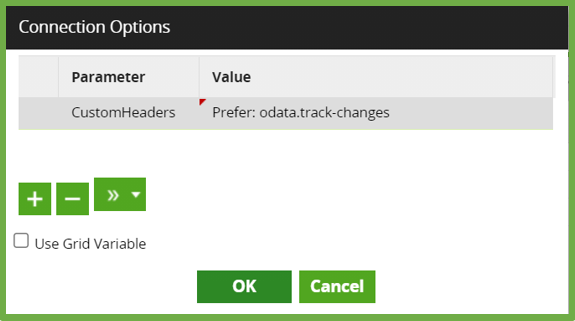

The header is passed on in ‘Connection Parameters’. Add parameter ‘CustomHeaders’ with value ‘Prefer: odata.track-changes’:

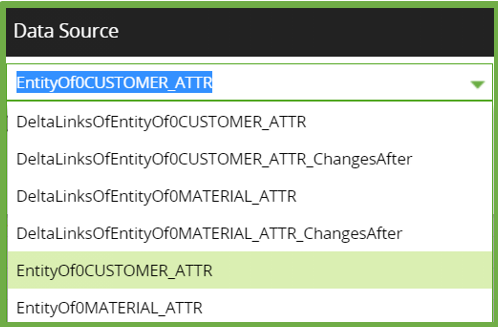

The ‘data source’ list should now show the different entities which can be delivered through the OData service. Choose ‘EntityOf/CUSTOMER_ATTR’

In ‘Data Selection’ you can select the columns to be transferred. The SAP OData service will pass on all columns – regardless of what you select here. If you leave columns out, it just means they are not created in the target database. Best practise is to keep all columns in the data acquisition layer of the data warehouse, and only leave columns out at higher layers.

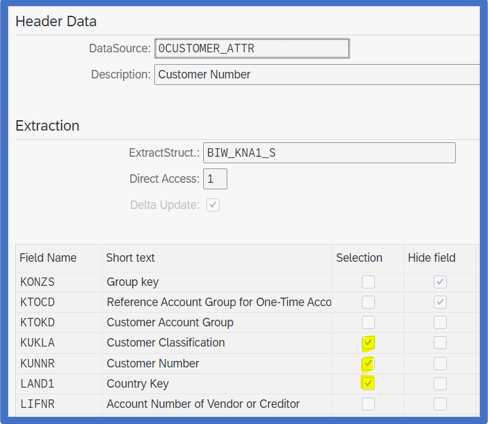

In the Data Source filter setting you can select rows on specific conditions. The interface does let you select on all fields but SAP can only process selections based on fields which are defined in the datasource definition as ‘Selection’ fields (Check in TC RSA6). You will get error messages if you select on other fields.

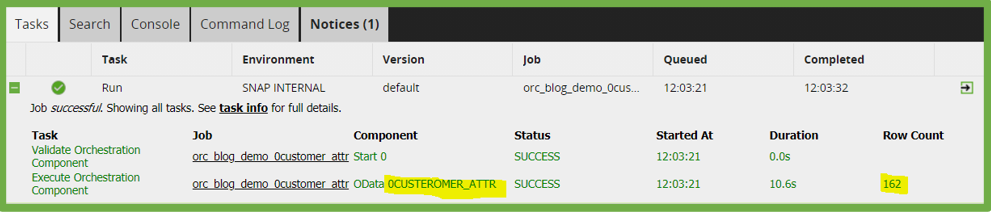

That’s it! Just configure the target database/schema/table names and run the job and you should get a full delta initialization with data:

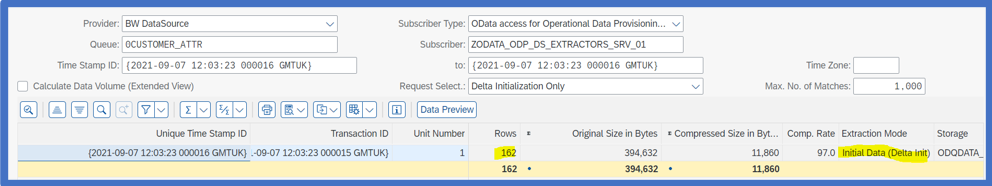

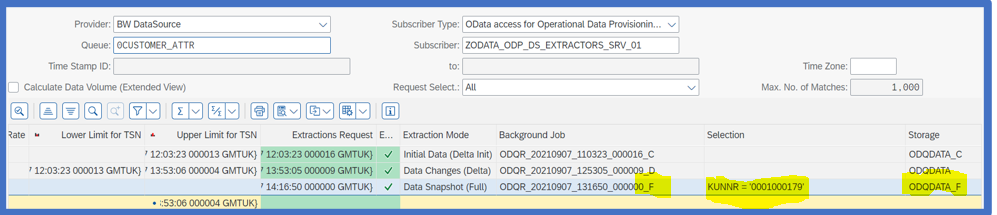

In S/4HANA TC ODQMON you can also see the request is successfully processed:

Example 2: Getting the next delta

To request the next delta you need to provide a ‘delta token’ in your API request. For that reason, getting delta records is a two-step process:

Step 1: Requesting the latest ‘delta token’ for our ODP Provider

Step 2: Request all new/changed records since the timestamp in the delta token.

You can easily build a more sophisticated shell around this process, by capturing some metadata as well as the delta token at the end of the init (or subsequent delta) run. The principle remains the same though so here are the basics:

Step 1: Request delta token

In Matillion, create a new job, use the OData Query component and configure with the same parameters and settings as in example 1, except:

- For Data Source, you now specify the ‘DeltaLinksOfEntityOf0CUSTOMER_ATTR’.

- Remove parameter ‘CustomHeaders’

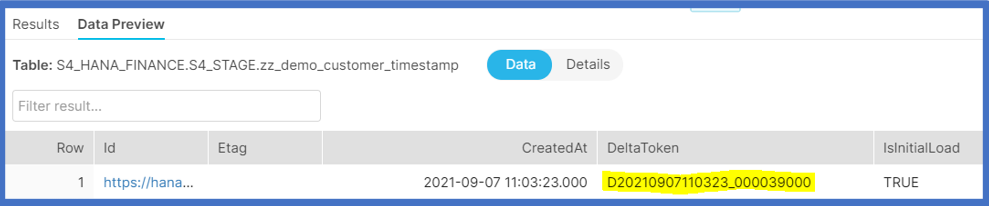

You can map the outcome to a metadata table. In this example I just use zz_demo_customer_timestamp. After running the job in Matillion, the timestamp is in Snowflake:

Step 2: Use the DeltaToken to get the next delta

Let me start by stating the obvious: Make sure you have some new or changed records in your data otherwise your next delta will come through with zero records.

For getting the delta you use the Data Source ‘EntityOfCustomer_Attr’ again (as in example 1).

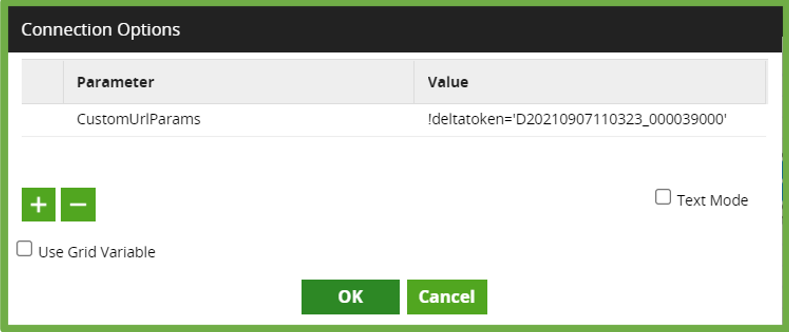

The parameter to pass through is set again in the Connection Options with the following settings:

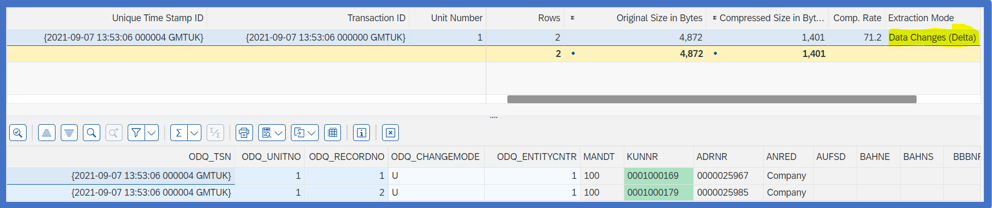

After running the job, the delta records (since the initialisation or last delta) will come through.

Of course it is no good having a hard-coded DeltaToken in

the definition, so an option would be to use a variable, which gets populated

by a query on the metadata table using the Matillion event ‘Query result to

Scalar’.

Example 3: Selective full load

This is very simple – you run it without any Connection options. Instead choose ‘Data Source Filter’ and pick the field you want to filter on. Make sure the datasource field is enabled for selection in RSA6:

Although Matillion lets you choose any field, the job will only work if you make your selection on a field enabled for selection.

Once the filter is set, just run and the selected records will come through. Again, ODQMON will show the request, with the selection criteria:

Next steps

You now understand the basics of the OData interface for SAP BW extractors and how you can use this in an ETL tool like Matillion to get data out of SAP ERP into a cloud data platform. This gives you a quick way of getting data out of SAP without having to work out all the complex relationships in the SAP ERP system to make data meaningful to the business. I hope you will all benefit from this easy way of unlocking SAP ERP with OData and your cloud data platform.

Last but not least – I would like to thank our amazing colleagues from Pivot Consulting for sharing their SAP S/4HANA system with us and providing excellent guidance on the S/4HANA system configuration. Here is to great partnerships!

REFERENCES

The following blog posts have been very helpful in putting the different pieces of the puzzle together:

https://blogs.sap.com/2020/11/02/exposing-sap-bw-extractors-via-odp-as-an-odata-service/https://blogs.sap.com/2020/11/04/consuming-odata-service-based-on-odp-extractor-in-python/https://blogs.sap.com/2020/05/29/distributing-data-from-bw-system-using-odp-based-data-extraction-and-odata/

GitHub

– Roman Broich: SAP Operational Data Provisioning ODATA Client

Visualbi.com blog – extracting-delta-data-ecc-extractors-using-odata/

AWS Datalakes for SAP: 2.2 SAP ODP Extraction – Beyond infrastructure for SAP Workloadshttps://www.saponaws.aworkshop.io/300-datalakes/300-sap-odp.html

SAP Help: ODP-Based Data Extraction via OData

https://answers.sap.com/questions/13433928/odata-gateway-service-with-multiple-delta-enabled.html