With flexible working now the norm in most enterprises, the ability to allocate work spaces efficiently has become a business-critical process. This article covers three advanced features of Microsoft Bookings (part of Office 365) which will help you make the best use of the desks you have available for your staff.

- Use Microsoft Bookings as an office desk booking system

- Advanced feature #1: Embed your Bookings page into a website

- Advanced feature #2: Extract Bookings data for Analytics

Use Microsoft Bookings as an Office Desk Booking System

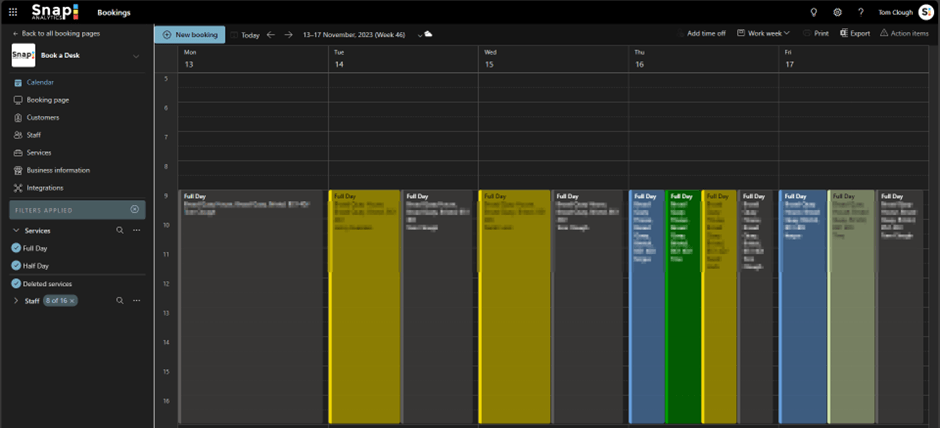

With many companies offering hybrid working to their workforce, offices with permanent desk allocation are becoming less common. Instead, more companies are choosing to provide hot desks so that staff can use desks on a flexible basis whenever they come to the office. To facilitate this, a desk booking system is recommended so that desks can be booked ahead of time and tussles over who uses which desk can be avoided. Although MS Bookings is intended for booking appointments or meetings with people, it can also be used as a handy desk (and meeting room) booking system if set up correctly. The steps to configure this can be found in this existing blog.

At Snap Analytics, MS Bookings is used as our hot desk booking system. Overall it works very well, although there are some minor limitations which will hopefully be resolved by Microsoft in due course. It is not yet possible to book multiple days in one go, and the booking page does not automatically populate the ‘name’ and ‘email address’ fields, even though it requires you to log in with a Microsoft account. If you can live with these quirks, then this tool should suit your needs.

Advanced feature #1: Embed your Bookings Page into a Website

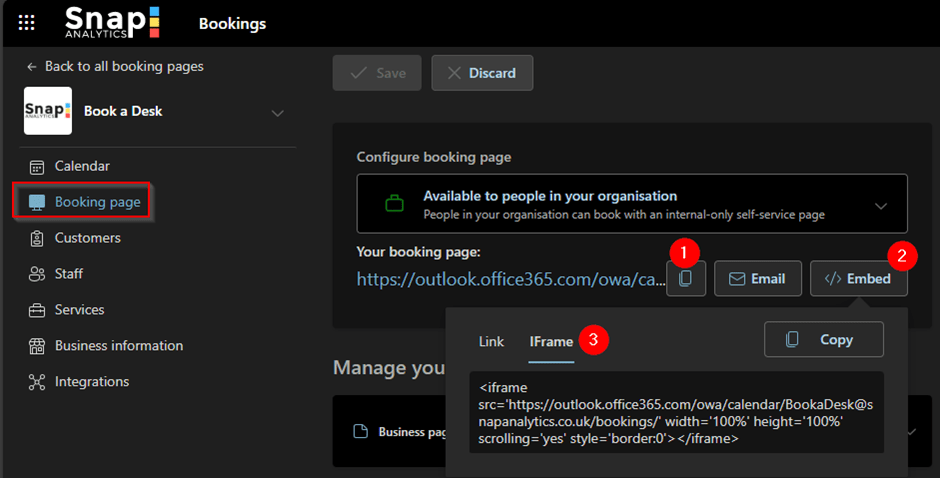

Each Bookings system comes with its own booking page. The link to it (1 below), which you can share with the users of your Bookings system, can be found in the ‘Booking page’ area in the navigation bar. However, if you have a website where customers book appointments, or a site used by staff for internal resources (e.g. SharePoint), embedding your booking page into that site can reduce the number of clicks required for customers or staff to create a booking. The Link or IFrame code for embedding can also be found in the ‘Booking page’ area in the navigation bar (2 & 3 below).

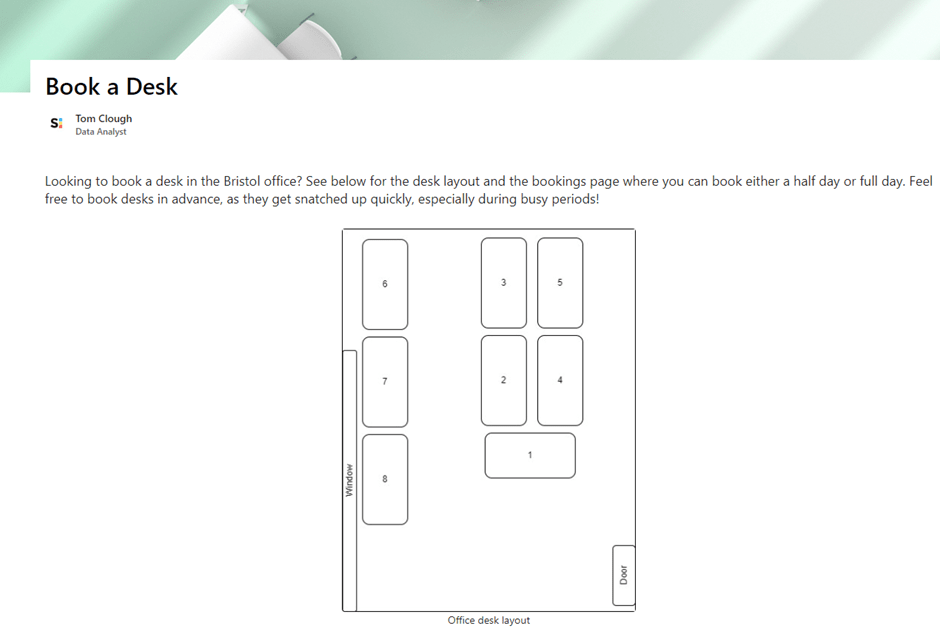

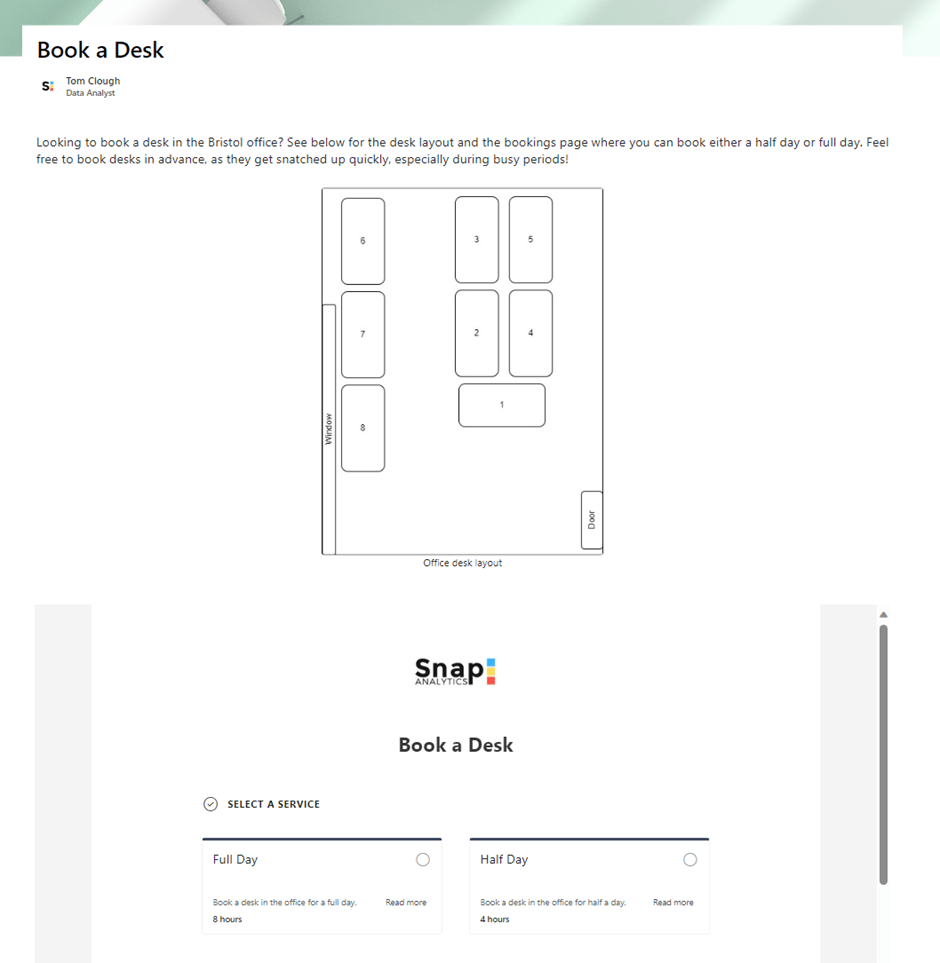

As we use MS Bookings as a hot desk booking system at Snap, we have created a SharePoint site to hold an office desk layout diagram and embedded the Bookings page below it. To do this, create a new SharePoint page, add a blurb so users know what it is for and optionally create a diagram with the desk layout of your office.

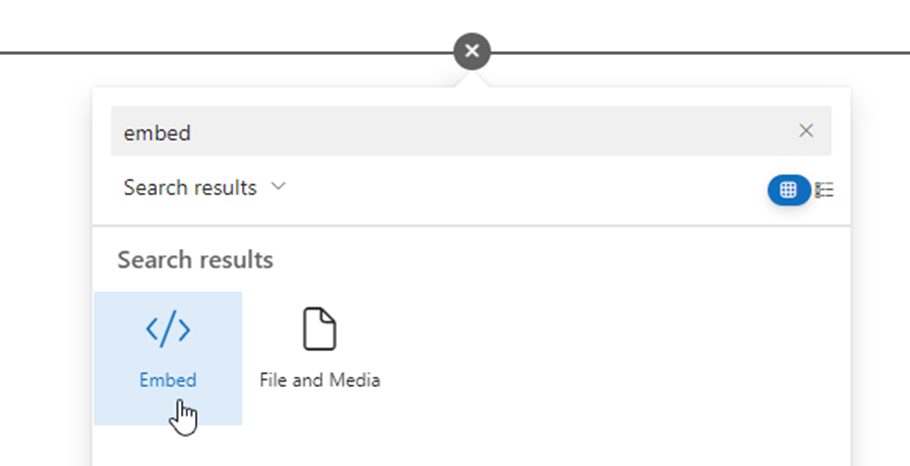

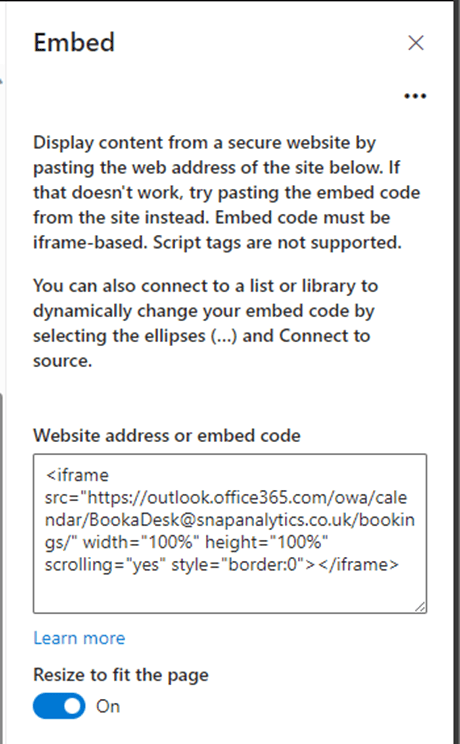

Below that, add a new section with the ‘Embed’ widget.

In the ‘Embed’ widget settings, paste the IFrame code we retrieved above.

Once you republish your page, you will have one centralised page to share with users of the Bookings system.

Advanced feature #2: Extracting Bookings Data for Analytics

If you would like to build a dashboard on top of your Bookings system to analyse your bookings data, this can be achieved using the MS Bookings Power Automate connectors. If this is something you are interested in, I strongly suggest setting it up before deploying the Bookings system to users, because the connector is triggered by new booking activity and does not extract historic booking data.

There are three Power Automate MS Bookings triggers: when an appointment is created, when it is updated and when it is cancelled. We will create a Power Automate flow for each of these triggers so that we can capture all Bookings actions.

1. Create a new Automated cloud flow

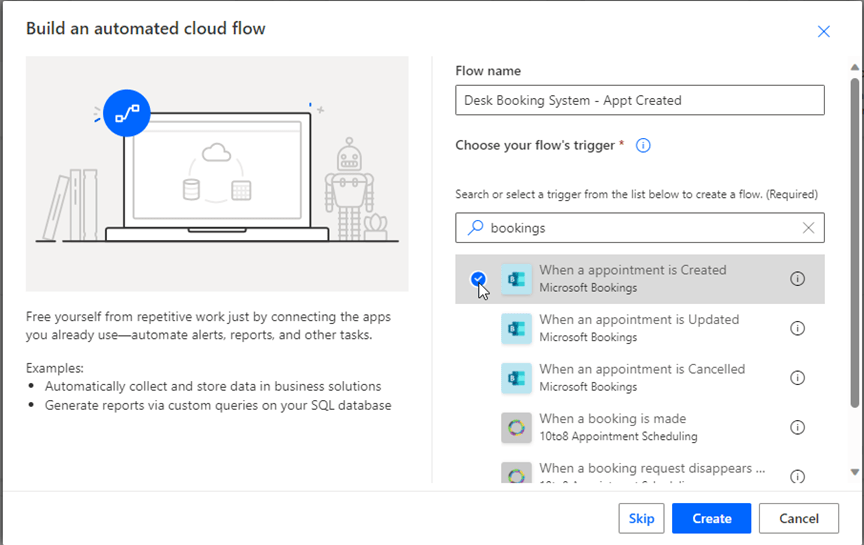

2. Name your flow and select the ‘When a appointment is Created’ trigger (hopefully they correct the grammar issue in the name!). Select ‘Create’.

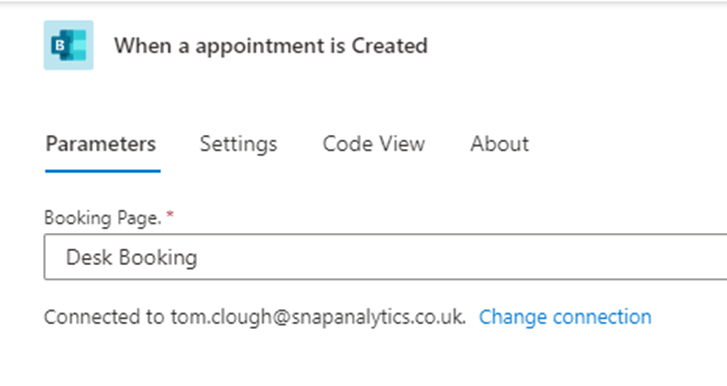

3. You will be taken to a canvas with a Bookings trigger on it. Click the trigger and select your Bookings page.

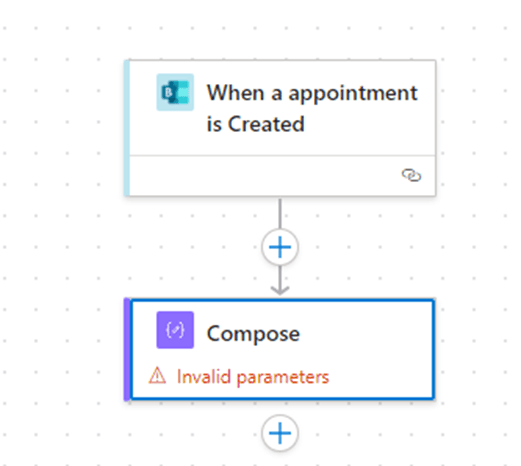

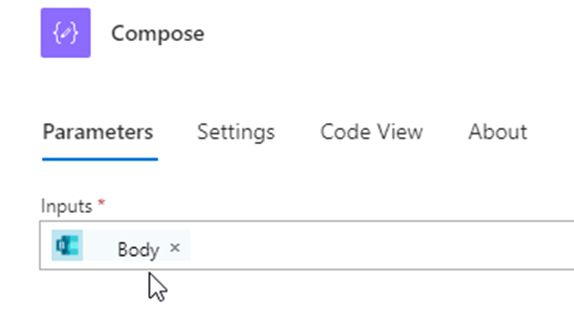

4. Add a new ‘Compose’ action flowing from the Booking Trigger.

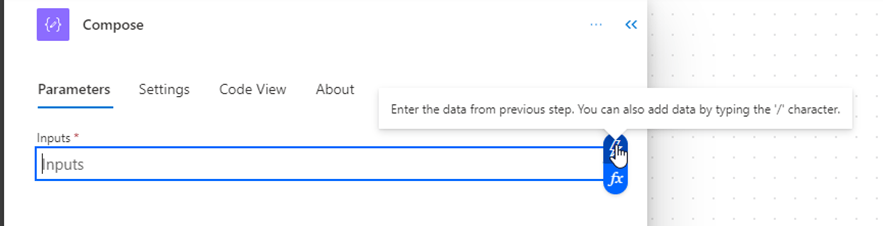

5. Select the Compose action, click the Inputs box and click the lightning icon to bring up a window to reference data from a previous step.

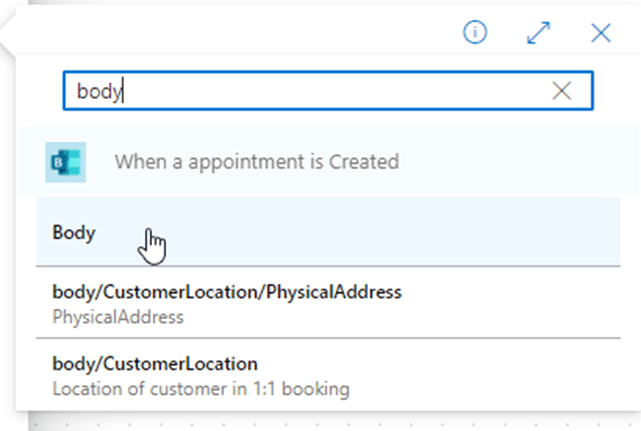

6. Search and select ‘Body’.

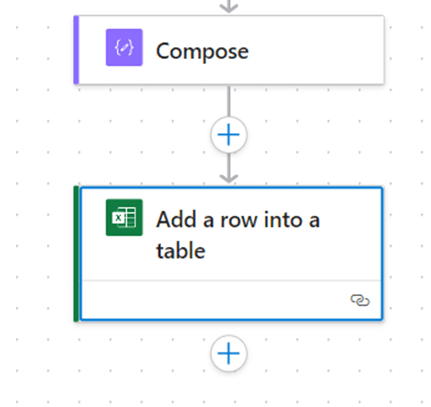

7. Add a new action to ‘Add a row into a table’.

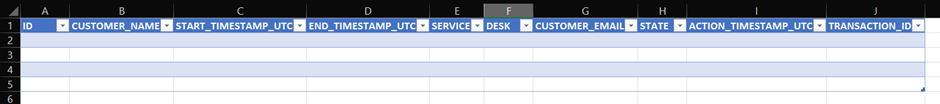

8. Before we can configure this action, a target Excel sheet will need to be created in a OneDrive or SharePoint location.

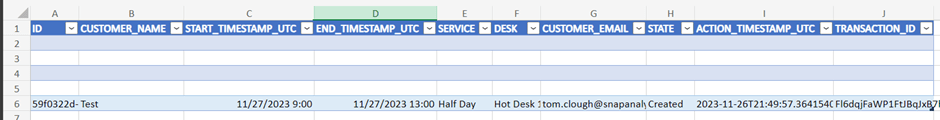

Either location works, although SharePoint is recommended as it is a shared location. Create the sheet somewhere your MS account has access to, as Power Automate will use the flow creator’s credentials. In the sheet, create a table to hold the Bookings data. In our case, we are using it for hot desk bookings. If you are too, then use the column headers shown below. Otherwise, you can customise them to better represent your data. Ensure that you flag it as a table in Excel by highlighting the column headers, going to the ‘Insert’ ribbon menu and clicking ‘Table’.

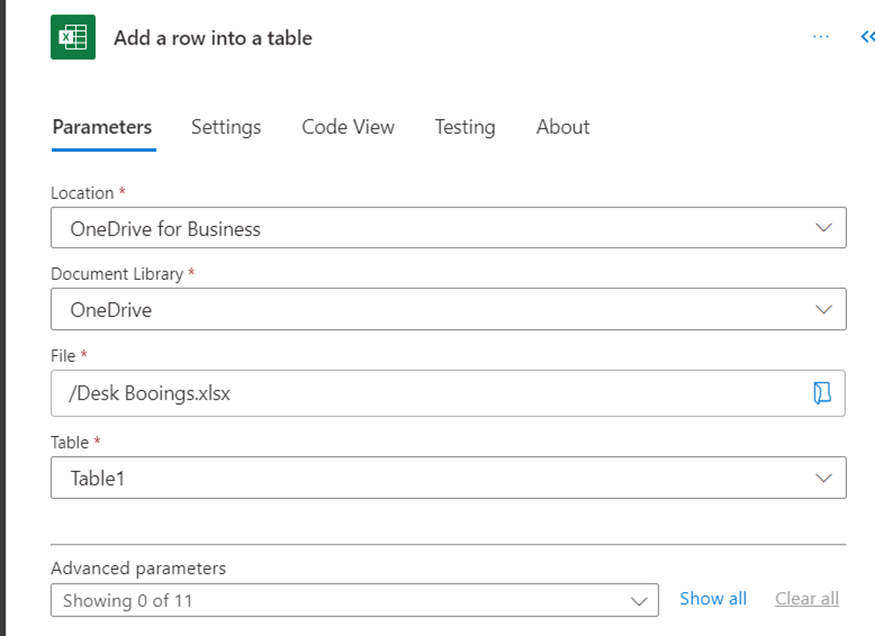

9. Now, in the ‘Add a row into a table’ action, search for your newly created Excel sheet and link to the table.

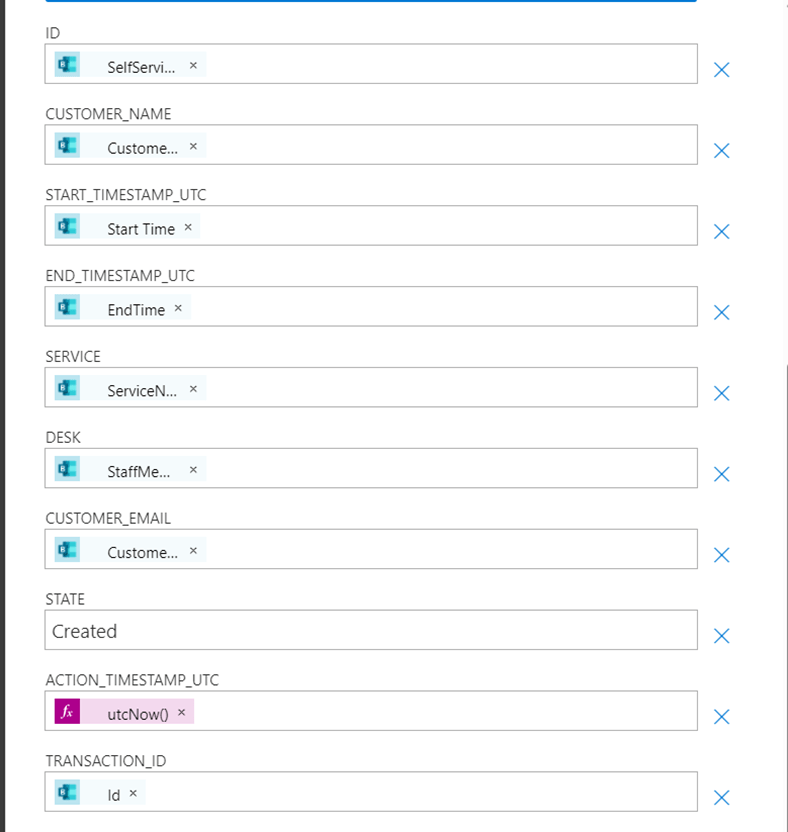

10. Click ‘Show all’ at the bottom of the ‘Parameters’ page. For each column, select the lightning bolt at the right of the field and fill in the following:

| Column | Power Automate Parameter |
| --- | --- |
| ID | SelfServiceAppointmentId |
| CUSTOMER_NAME | CustomerName |
| START_TIMESTAMP_UTC | Start Time |
| END_TIMESTAMP_UTC | End Time |
| SERVICE | ServiceName |
| DESK | StaffMembers DisplayName |
| CUSTOMER_EMAIL | CustomerEmail |
| STATE | ‘Created’ (as a fixed string) |
| ACTION_TIMESTAMP_UTC | utcNow() (found in the functions list) |
| TRANSACTION_ID | Id |

The completed action should come out looking like this:

11. Save the Power Automate flow.

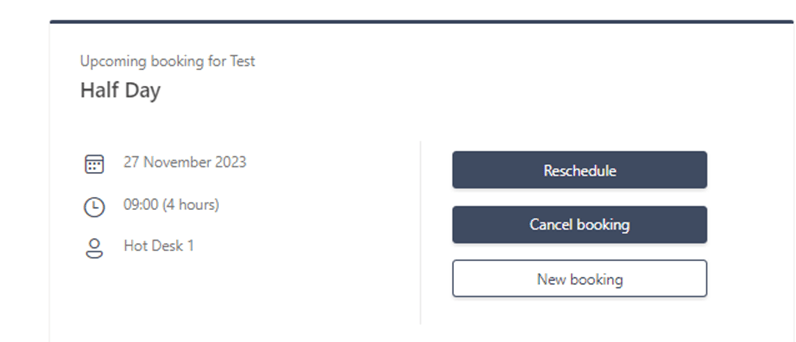

Next, we will test it to make sure it works. Click the ‘Test’ button in the top right, select ‘Manually’ and then ‘Test’. Now go and create a booking via the booking page.

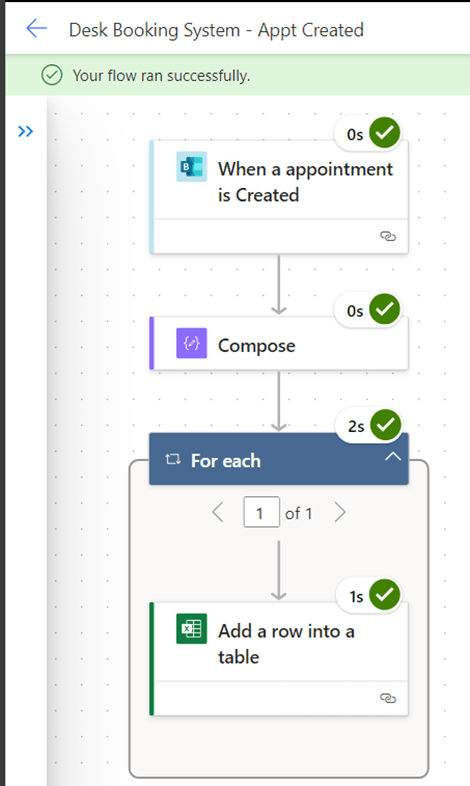

Once that is done, you should see a successful test in Power Automate:

You should also see a new row in your Excel table with the details of your booking (feel free to delete any empty rows at the top of the table):

12. Lastly, repeat the above steps in two new Power Automate flows for the ‘Updated’ and ‘Cancelled’ Bookings triggers.

The only differences should be the trigger and the ‘STATE’ column string, which should be set to ‘Updated’ or ‘Cancelled’ to match the trigger. Make sure to point both flows to the same Excel sheet.

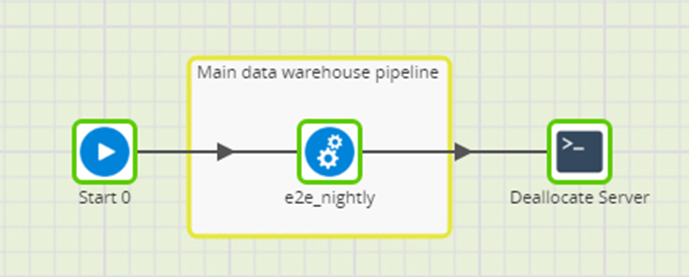

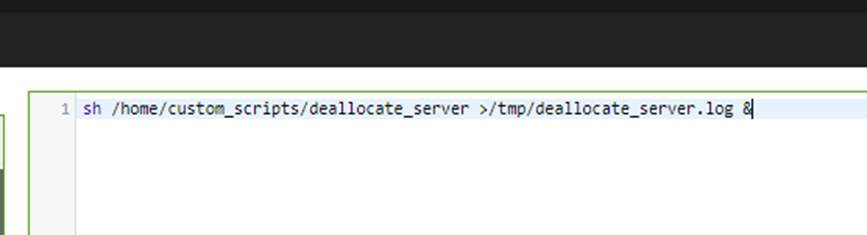

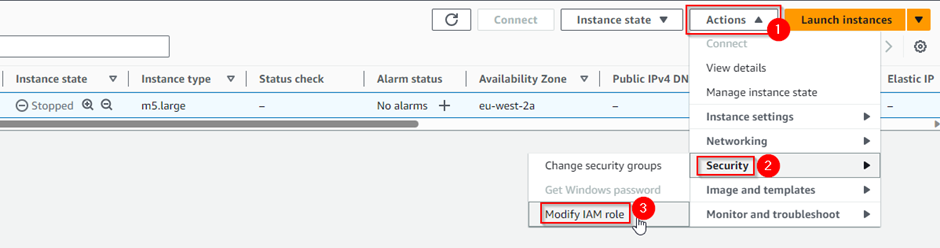

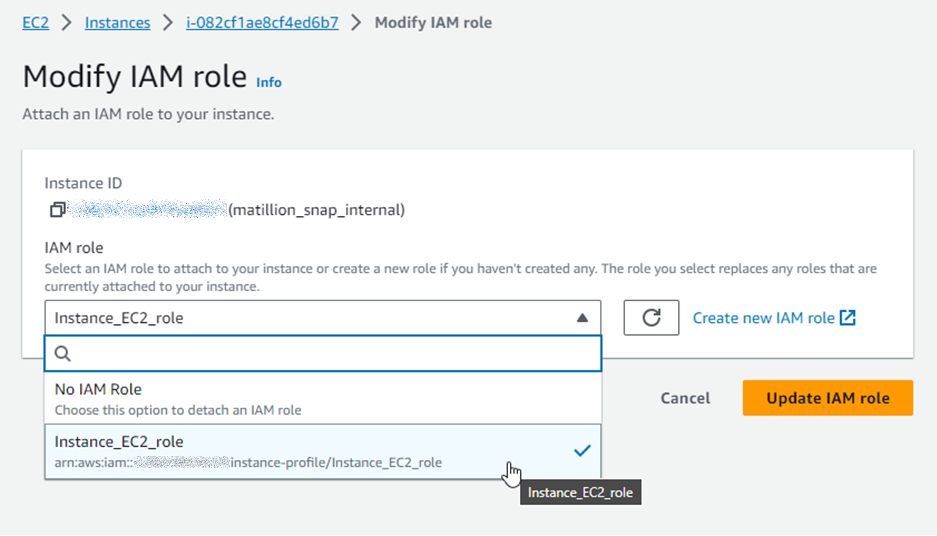

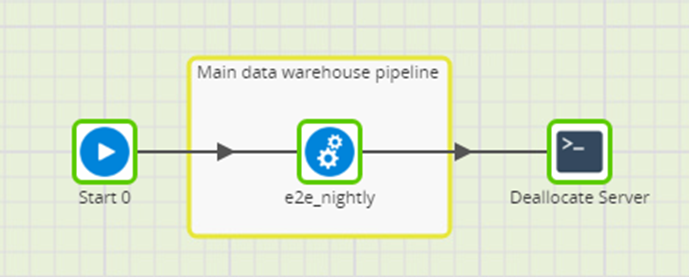

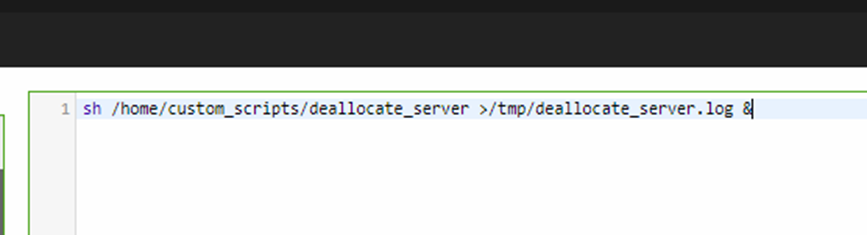
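Because all three flows append to the same table, the sheet ends up as an event log rather than a list of current bookings: a booking that is created, moved and then cancelled appears as three rows. The following is a minimal Python sketch of how the current state of each booking could be derived from that log. It assumes the rows have been exported as dictionaries keyed by the column names above, that the ID column stays the same across the created, updated and cancelled events of one booking, and that timestamps are ISO 8601 UTC strings. None of these assumptions is confirmed here, so check them against your own data. The sample rows are made up for illustration.

```python
from datetime import datetime


def parse_utc(ts: str) -> datetime:
    """Parse an ISO 8601 UTC string such as '2024-03-01T10:00:00.0000000Z'.

    Assumption: timestamps arrive in this shape; adjust if your export differs.
    """
    # Drop the trailing 'Z' and any fractional seconds before parsing.
    core = ts.rstrip("Z").split(".")[0]
    return datetime.strptime(core, "%Y-%m-%dT%H:%M:%S")


def latest_state(rows):
    """Return the most recent event row for each booking ID."""
    latest = {}
    for row in rows:
        key = row["ID"]  # assumed stable across create/update/cancel events
        current = latest.get(key)
        if current is None or parse_utc(row["ACTION_TIMESTAMP_UTC"]) >= parse_utc(
            current["ACTION_TIMESTAMP_UTC"]
        ):
            latest[key] = row
    return latest


def active_bookings(rows):
    """Keep only bookings whose latest event is not a cancellation."""
    return [r for r in latest_state(rows).values() if r["STATE"] != "Cancelled"]


# Made-up sample rows, using a subset of the columns from the table in step 10.
SAMPLE_ROWS = [
    {"ID": "A1", "DESK": "Desk 1", "STATE": "Created",
     "ACTION_TIMESTAMP_UTC": "2024-03-01T10:00:00.0000000Z"},
    {"ID": "A1", "DESK": "Desk 2", "STATE": "Updated",
     "ACTION_TIMESTAMP_UTC": "2024-03-01T11:30:00.0000000Z"},
    {"ID": "B2", "DESK": "Desk 3", "STATE": "Created",
     "ACTION_TIMESTAMP_UTC": "2024-03-01T12:00:00.0000000Z"},
    {"ID": "B2", "DESK": "Desk 3", "STATE": "Cancelled",
     "ACTION_TIMESTAMP_UTC": "2024-03-02T08:15:00.0000000Z"},
]

if __name__ == "__main__":
    for booking in active_bookings(SAMPLE_ROWS):
        print(booking["ID"], booking["DESK"], booking["STATE"])
```

With the sample rows, only booking A1 remains active, and it carries the desk from its latest update. In a warehouse the same idea would typically be expressed as a "latest row per ID" query.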

This Excel sheet can now be ingested into a Data Warehouse and reported on using a Data Visualisation tool such as Power BI. At Snap, we use Matillion to load it into our Snowflake account, where a star schema with dimension tables enriches the Bookings data with master data such as employee attributes. We then have a simple Snowflake Dashboard to visualise the data.
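As an illustration of the kind of question such a dashboard answers, here is a small Python sketch that counts bookings per desk and weekday. It is not the Snowflake dashboard described above, just a stand-in using the standard library. It assumes each input row represents one active booking with the DESK and START_TIMESTAMP_UTC columns from the table, and that timestamps are ISO 8601 UTC strings. The weekday is taken from the UTC timestamp, so bookings near midnight may fall on a different local day. The sample data is made up.

```python
from collections import Counter
from datetime import datetime

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def parse_utc(ts: str) -> datetime:
    """Parse an ISO 8601 UTC string, ignoring fractional seconds (assumed format)."""
    core = ts.rstrip("Z").split(".")[0]
    return datetime.strptime(core, "%Y-%m-%dT%H:%M:%S")


def desk_day_counts(bookings):
    """Count bookings for each (desk, weekday) pair."""
    counts = Counter()
    for booking in bookings:
        start = parse_utc(booking["START_TIMESTAMP_UTC"])
        counts[(booking["DESK"], WEEKDAYS[start.weekday()])] += 1
    return counts


# Made-up sample bookings: two Mondays for Desk 1, one Tuesday for Desk 2.
SAMPLE_BOOKINGS = [
    {"DESK": "Desk 1", "START_TIMESTAMP_UTC": "2024-03-04T09:00:00Z"},
    {"DESK": "Desk 1", "START_TIMESTAMP_UTC": "2024-03-11T09:00:00Z"},
    {"DESK": "Desk 2", "START_TIMESTAMP_UTC": "2024-03-05T09:00:00Z"},
]

if __name__ == "__main__":
    for (desk, day), n in desk_day_counts(SAMPLE_BOOKINGS).most_common():
        print(f"{desk} on {day}: {n} booking(s)")
```

Feeding this function only the active bookings, rather than every row in the event log, avoids counting cancelled or superseded bookings.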

Final thoughts

Hopefully you found these advanced features of MS Bookings useful. We have implemented all three of these internally at Snap and have benefited greatly from using MS Bookings as a hot desk booking system, embedding it into our SharePoint site, and gathering analytics data on hot desk usage such as which days and desks are most popular. Please feel free to reach out to me on LinkedIn or drop a comment on this blog if you have any further questions.