SAP has rocked the boat. It has issued an SAP note (3255746) declaring a popular method for moving data from SAP into analytics platforms out of bounds for customers. Customers and software vendors are concerned, because they have to ensure they operate within the terms and conditions of their license agreement with SAP. It seems unfair that SAP unilaterally changes these terms and conditions after organisations have purchased its product. I will refrain from giving legal advice, but my understanding is that SAP notes are not legally binding. I imagine the legal teams will have a field day trying to work this all out. In this article I will explain the context and consequences of this SAP note. I will also get my crystal ball out and try to predict SAP's next move, and I will share some further considerations which may help you decide how to move forward.

What exactly have SAP done this time?

SAP first published note 3255746 in 2022. In the note, SAP explained that 3rd parties (customers, product vendors) could use SAP APIs for the Operational Data Provisioning (ODP) framework, but that these APIs were not supported. The APIs were meant for internal use. As such, SAP reserved the right to change the behaviour of these APIs and/or remove them altogether. Recently, SAP updated the note (version 4). Out of the blue, SAP declared that it is no longer permitted to use the APIs for ODP. For good measure, SAP threatens to restrict and audit the unpermitted use of this feature. With a history of court cases decided in SAP's favour over license breaches, it is no wonder that customers and software vendors are getting a bit nervous. So, let’s look at the wider context. What is this ODP framework, and what does the note actually mean for customers and product vendors?

SAP ODP – making the job of getting data out of SAP somewhat less painful

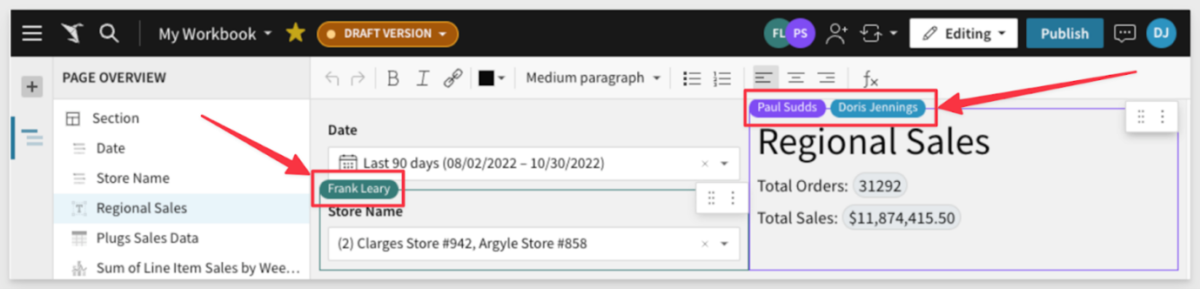

Getting data out of SAP is never easy, but ODP offers very useful features that take away some of the burden. It enables external data consumers to subscribe to data sets. Instead of receiving difficult-to-decipher raw data, consumers get data sets which are already modelled for analytical consumption. Moreover, the ODP framework supports ‘delta enabled’ data sets: after the initial load, only new and changed records are sent, which significantly reduces the volume of data to refresh on a day-to-day basis. When the ODP framework was released (around 2011(1)), 3rd party data integration platforms were quick to provide a dedicated SAP ODP connector. Vendors like Informatica, Talend, Theobald and Qlik have had an ODP connector for many years. More recently, Azure Data Factory and Matillion released their connectors as well. SAP also offered a connection to the ODP framework through the open data protocol OData. This means you can build your own interface if the platform of your choice does not have an ODP plug-in.
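To make the benefit of a delta-enabled data set a bit more tangible, here is a minimal Python sketch of the general idea. It is not ODP's API and not how SAP implements its delta handling; the `Row` class, the `changed_at` counter and the `delta_extract` function are all hypothetical names I made up for illustration. The sketch simply remembers how far the consumer has read and only returns records changed since then.

```python
from dataclasses import dataclass


@dataclass
class Row:
    key: str
    value: int
    changed_at: int  # hypothetical change counter, not a real SAP field


def full_extract(source):
    """Return every row, as a full refresh would."""
    return list(source)


def delta_extract(source, last_pointer):
    """Return only rows changed after last_pointer, plus the new pointer."""
    changed = [row for row in source if row.changed_at > last_pointer]
    new_pointer = max((row.changed_at for row in changed), default=last_pointer)
    return changed, new_pointer


# Initial load: a pointer of 0 means nothing has been read yet.
source = [Row("A", 10, 1), Row("B", 20, 2), Row("C", 30, 3)]
initial, pointer = delta_extract(source, 0)

# One record is updated and one is added in the source system.
source[1] = Row("B", 25, 4)
source.append(Row("D", 40, 5))

# The next refresh only moves the two changed rows, not all four.
delta, pointer = delta_extract(source, pointer)
print(len(full_extract(source)), len(delta))  # prints "4 2"
```

On a table with millions of rows and a few thousand daily changes, the difference between the two approaches is what makes daily or near real-time refreshes practical.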

One can imagine that software vendors are not best pleased with SAP’s decision to no longer permit the use of the ODP framework by 3rd parties. Although all platforms mentioned above have other types of SAP connectors(2), the ODP connector has been the go-to solution for many years. The fact that this solution was not officially supported by SAP has never really scared the software vendors. ODP was and remains deeply integrated in SAP’s own technology stack, and the chances that SAP will change the architecture in current product versions are next to zero.

Predicting SAP’s next move

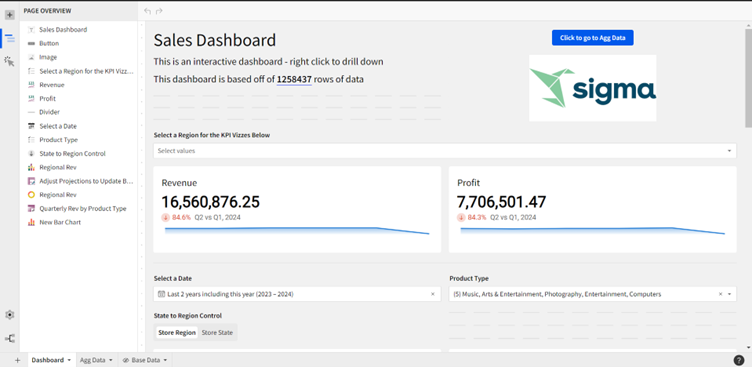

You might wonder why SAP is doing this. Well, in recent years, customers have voted with their feet and moved SAP data to more modern, flexible and open data & analytics platforms. There is no lack of competition. AWS, Google, Microsoft, Snowflake and a handful of other contenders all offer cost-effective data platforms with virtually limitless scalability. On these data platforms, you are free to use the data and analytics tools of your choice, or take the data out to wherever you please without additional costs. SAP also has a data & analytics platform, but it is well behind the curve. There are two SAP products to consider: SAP Analytics Cloud (SAC) and SAP DataSphere.

The first is a planning and analytics toolset for business users and was introduced in 2015. For a long time, it was largely ignored. In recent years, it has matured and should now be considered a serious contender to Power BI, Tableau, Qlik and so on. I’m not going to do a full-blown comparison here, but the fact that SAC has integrated planning capabilities is a killer feature.

SAP DataSphere is a different story. It is relatively new (introduced as SAP Data Warehouse Cloud in 2020), and seasoned SAP professionals know what to do with new products. If you’re curious, you can do a PoC or innovation project. If you’re not, or you don’t have the time or means for this kind of experimenting, you just sit and wait until the problems are flushed out. SAP DataSphere is likely to suffer from teething problems for a bit longer, and it will take time before it is as feature-rich as the main competing data platforms. One of the critical features which was missing until very recently was the ability to offload data to cloud storage (S3/Blob/buckets, depending on your cloud provider). That feature was added in Feb 2024, around the same time that SAP decided 3rd parties could no longer use the ODP interface to achieve exactly the same thing. Coincidence?

So where is SAP going with this? Clearly it wants all its customers to embrace SAP DataSphere. SAP charges for storage and compute, so of course it tries to keep as many workloads and as much data as it can on its platform. This is no different from the other platform providers. What is different is that SAP deliberately puts up barriers to taking the data out, where other providers let you take your data wherever you want. SAP’s competitors know they offer a great service at a very competitive price. It seems SAP doesn’t want to compete on price or service, but chooses to put up a legal barrier to keep the customer’s data on its platform.

SAP Certification for 3rd party ETL tools no longer available

Blocking the use of ODP by 3rd party applications is only the beginning. SAP has already announced it will no longer certify 3rd party ETL tools for the SAP platform(3). The out-and-out SAP specialists have invested heavily in creating bolt-on features on the SAP platform to replicate large SAP data sets efficiently, often in near real-time. The likes of Fivetran, SNP Glue and Theobald have all introduced their own innovative (proprietary) code purely for this function. SAP used to certify this code, but has now stopped doing so. Again, the legal position is unclear and perhaps SAP will do a complete U-turn on this, but for now it leaves these vendors wondering what the future holds for their SAP data integration products.

What do you need to do if you use ODP now through a 3rd party application?

My advice is to start by involving your legal team. In my opinion an SAP note is not legally binding in the way terms & conditions are, but I appreciate that my opinion in legal matters doesn’t count for much.

If you are planning to stay on your current product version for the foreseeable future and you have no contract negotiations with SAP coming up then you can carry on as normal. When you are planning to move to a new product version though, or if your contract with SAP is up for renewal, it would be good to familiarise yourself with alternatives.

As I mentioned before, most 3rd party products have multiple ways of connecting to SAP, so it would be good to understand what the impact is if you had to start using a different method.

It also makes sense to stay up-to-date with the SAP DataSphere roadmap. When I put my rose-tinted glasses on, I can see a future where SAP provides an easy way to replicate SAP data to the cloud storage of your choice, in near real-time and in a cost-effective way. Most customers wouldn’t mind paying a reasonable price for this. I expect SAP and its customers might have very different ideas of what a reasonable price is, but until the solution is there, there is no point speculating. If you are looking for some inspiration to find the best way forward for you, come talk to Snap Analytics. Getting data out of SAP is our core business and I am sure we can help you find a future-proof, cost-effective way forward.

Footnotes and references

(1) – The ODP framework version 1.0 was released around 2011, specifically with SAP NetWeaver 7.0 SPS 24, 7.01 SPS 09, 7.02 SPS 08. The current version of ODP is 2.0, which was released in 2014 with SAP NetWeaver 7.3 SPS 08, 7.31 SPS 05, 7.4 SPS 02. See notes 1521883 and 1931427 respectively.

(2) – Other types of SAP connections: One of my previous blog posts discusses the various ways of getting data out of SAP in some detail: Need to get data out of SAP and into your cloud data platform? Here are your options

(3) – Further restrictions for partners on providing solutions to get data out of SAP, see this article: Guidance for Partners on certifying their data integration offerings with SAP Solutions