With the rise of cloud data warehouses, it has become increasingly important to be able to move data easily from SAP to other platforms. There are plenty of software vendors promising ‘easy integration with SAP’, but the reality is that getting data out of SAP at enterprise scale is anything but easy. And what works in one place might not be the best solution somewhere else. No two SAP systems are the same, and data and analytics requirements vary between organisations. A great solution for one might be too expensive, too complex or simply not fit for purpose for another.

To understand the options for getting data out of SAP, it is important to understand three key concepts:

I. Connection types and communication protocols

II. Types of SAP data sources

III. Data Integration patterns

In this blog post, I will explain the SAP-specific considerations for these three key topics. This article is written specifically with data & analytics in mind, for data coming from the SAP ABAP Platform. This platform is used for SAP S/4HANA, older versions of SAP ERP and a whole range of other SAP applications. Be aware that there are also SAP applications that do not run on the SAP ABAP Platform (for example SuccessFactors, Cloud for Customer and Concur), for which this article is not relevant.

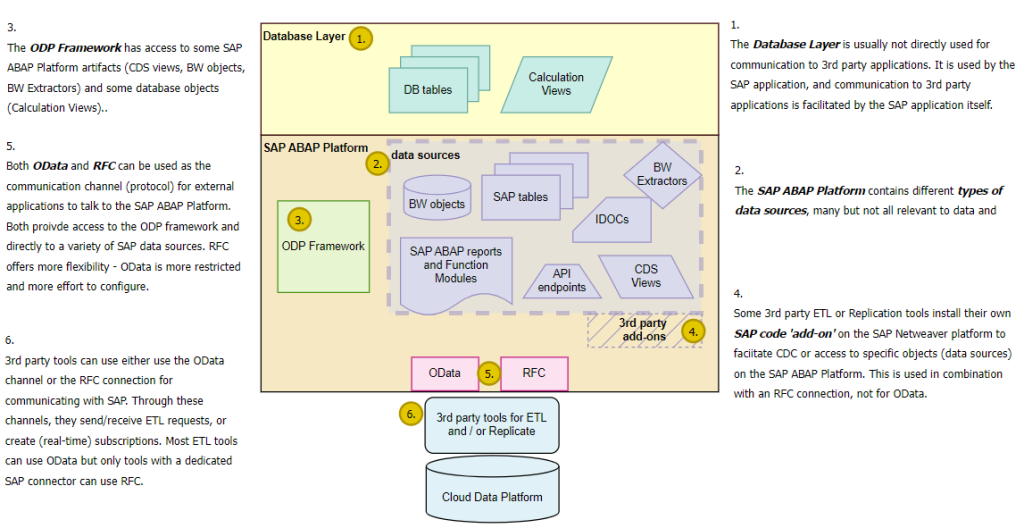

Concept I: Connection types – OData and RFC

There are two ways of connecting to an SAP system for data & analytics purposes: OData and SAP Remote Function Call (RFC). OData (Open Data Protocol) is an open standard that is widely used for building and querying REST APIs.
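To make the OData side a little more concrete, here is a minimal Python sketch that builds an OData V2 query URL using standard system query options (`$select`, `$filter`, `$top`, `$format`). The host, the service name `ZSALES_ORDER_SRV`, the entity set `SalesOrderSet` and its property names are hypothetical; only the `/sap/opu/odata/sap/` prefix follows the usual SAP Gateway convention.

```python
from urllib.parse import quote, urlencode


def build_odata_url(base_url, entity_set, select=None, filter_expr=None, top=None):
    """Build an OData V2 query URL from standard system query options."""
    params = {"$format": "json"}
    if select:
        params["$select"] = ",".join(select)
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    # quote_via=quote encodes spaces as %20 rather than '+'; safe="$,'" keeps
    # the option names, comma-separated field lists and string quotes readable.
    query = urlencode(params, quote_via=quote, safe="$,'")
    return f"{base_url.rstrip('/')}/{entity_set}?{query}"


# Hypothetical host, service, entity set and properties, for illustration only.
url = build_odata_url(
    "https://sap.example.com/sap/opu/odata/sap/ZSALES_ORDER_SRV",
    "SalesOrderSet",
    select=["SalesOrder", "SoldToParty", "NetAmount"],
    filter_expr="SalesOrganization eq '1000'",
    top=100,
)
print(url)
```

Because OData is plain HTTP, any HTTP client can send this request; the equivalent RFC access needs SAP's own connector libraries.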

RFC is an SAP-proprietary protocol and has been around much longer than OData. Most SAP applications use RFC to communicate with each other. SAP is heavily biased towards RFC: many features and functions available for RFC are simply not available in the OData context.

I have left out ODBC as it is not considered good practice to use this with SAP systems for data integration purposes.

Concept II: Types of SAP data sources

An SAP system is not just a database with tables and fields, but a complex application with different types of artifacts. Below is an overview of the types of data sources on the SAP ABAP Platform that are relevant for data & analytics.

Data source type 1: Tables, using direct table extraction

You can get data from any table in the SAP system, either through the SAP application layer or by querying the underlying database (license restrictions may apply). SAP has hundreds of thousands of tables. Many of them have cryptic four-character names and short, abbreviated field names, so it can be challenging to find the right tables and the links between them. If you are comfortable with the SAP language and you know your VBAP from your VBAK, this might work for you, but in general it is not seen as good practice for an enterprise-scale data platform.
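As an illustration of what ‘knowing your VBAP from your VBAK’ means in practice: VBAK holds sales document headers and VBAP holds the items, linked by the document number VBELN. The sketch below parses rows in the shape returned by the function module RFC_READ_TABLE when it is called with a DELIMITER (a FIELDS table plus a DATA table of WA strings) and joins the items to their headers. The sample rows are made up, and the RFC call itself (for example via PyRFC) is deliberately left out so the sketch runs offline.

```python
def parse_rfc_read_table(result, delimiter="|"):
    """Turn an RFC_READ_TABLE-style result into a list of dicts.

    Assumes the call was made with DELIMITER set, so each DATA row's WA
    string holds the selected values joined by the delimiter, in the same
    order as the FIELDS table. Fixed-width padding is stripped.
    """
    names = [f["FIELDNAME"] for f in result["FIELDS"]]
    rows = []
    for line in result["DATA"]:
        values = [v.strip() for v in line["WA"].split(delimiter)]
        rows.append(dict(zip(names, values)))
    return rows


def join_items_to_headers(headers, items, key="VBELN"):
    """Inner join of item rows (VBAP) to header rows (VBAK) on the document number."""
    by_key = {h[key]: h for h in headers}
    return [{**by_key[i[key]], **i} for i in items if i[key] in by_key]


# Made-up sample data in the shape of RFC_READ_TABLE output.
vbak_result = {
    "FIELDS": [{"FIELDNAME": "VBELN"}, {"FIELDNAME": "KUNNR"}],
    "DATA": [{"WA": "0000012345|0000100001"}],
}
vbap_result = {
    "FIELDS": [{"FIELDNAME": "VBELN"}, {"FIELDNAME": "POSNR"}, {"FIELDNAME": "MATNR"}],
    "DATA": [
        {"WA": "0000012345|000010|MAT-A"},
        {"WA": "0000012345|000020|MAT-B"},
        {"WA": "0000099999|000010|MAT-C"},  # no matching header in this sample
    ],
}

lines = join_items_to_headers(
    parse_rfc_read_table(vbak_result), parse_rfc_read_table(vbap_result)
)
for line in lines:
    print(line["VBELN"], line["POSNR"], line["KUNNR"], line["MATNR"])
```

Even this tiny example needs table-specific knowledge (which table, which key, which fields), and that effort multiplies across hundreds of tables.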

Data source type 2: SAP Operational Data Provisioning framework (ODP)

ODP is not just a single data source but a complete framework in which external consumers (subscribers) can subscribe to datasets. These datasets typically carry more metadata than database tables and can contain useful links between datasets. The ODP framework provides a single interface to a variety of data source types, including:

- SAP BW extractors (or S-API extractors)

- SAP CDS views

- HANA Calculation views

- SAP BW objects (queries, InfoProviders)
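A key idea behind ODP is that each subscriber gets its own stream of changes (deltas) from the same data source, independently of other subscribers. The sketch below is a deliberately simplified in-memory model of that idea, not SAP's implementation or API: the class `DeltaQueue` and its methods are hypothetical names chosen for illustration.

```python
class DeltaQueue:
    """Hypothetical, simplified model of a subscriber-based delta queue."""

    def __init__(self):
        self._records = []   # append-only list of change records
        self._pointers = {}  # subscriber name -> index of the next unread record

    def publish(self, record):
        self._records.append(record)

    def subscribe(self, subscriber):
        # In this sketch a new subscriber starts at the current end of the
        # queue; a real ODP subscription would begin with an initial full load.
        self._pointers.setdefault(subscriber, len(self._records))

    def fetch(self, subscriber):
        """Return records this subscriber has not seen yet and advance its pointer."""
        start = self._pointers[subscriber]
        batch = self._records[start:]
        self._pointers[subscriber] = len(self._records)
        return batch


queue = DeltaQueue()
queue.subscribe("data_warehouse")
queue.subscribe("data_lake")

queue.publish({"VBELN": "0000012345", "change": "created"})
wh_first = queue.fetch("data_warehouse")   # sees the 'created' record

queue.publish({"VBELN": "0000012345", "change": "updated"})
wh_second = queue.fetch("data_warehouse")  # only the 'updated' record
lake = queue.fetch("data_lake")            # both records, read at its own pace
```

The point of the design is that a slow or paused consumer does not lose data and does not hold back the others.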

Data source type 3: API Endpoints

Most SAP APIs are designed for operational processes and are not suitable for data & analytics use cases. The exception is an API endpoint created on top of the ODP framework. Be aware that not all ODP features are available through such API endpoints.
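When an ODP data source is exposed as an OData endpoint, a consumer typically has to page through large result sets. A minimal sketch, assuming the OData V2 JSON format, where rows sit under `d.results` and server-driven paging is signalled by a `d.__next` link. The `fetch` callable is injected so the sketch runs offline; in practice it would be an authenticated HTTP GET. Delta handling is left out, as its details depend on how the service is generated.

```python
def read_all_pages(first_url, fetch):
    """Follow OData V2 server-driven paging and collect all rows.

    `fetch` is any callable that takes a URL and returns the parsed JSON body.
    """
    rows, url = [], first_url
    while url:
        body = fetch(url)["d"]
        rows.extend(body.get("results", []))
        url = body.get("__next")  # absent on the last page
    return rows


# Offline stand-in for the HTTP layer: two hypothetical pages.
pages = {
    "page1": {"d": {"results": [{"SalesOrder": "1"}, {"SalesOrder": "2"}], "__next": "page2"}},
    "page2": {"d": {"results": [{"SalesOrder": "3"}]}},
}
all_rows = read_all_pages("page1", pages.__getitem__)
print(len(all_rows))
```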

Other data source types (IDocs / Reports and Function modules / Queries)

There are various other ways of ‘getting data out of SAP’, but they have limited use cases within the domain of data & analytics.

Concept III: Data integration patterns

Within the context of data & analytics, the main processes for data ingestion are:

- Extract, Transform and Load (ETL) processing

- Change Data Capture (CDC) and data replication

- Event streaming / messaging

Each of these topics could easily fill a blog post of its own, or a complete book for that matter. In the next paragraphs I will give an overview of each pattern, restricting the scope to data & analytics for SAP data.

Data Integration pattern 1: Extract, Transform and Load (ETL)

I assume most people reading this are familiar with ETL. The downside of ETL is that there is usually a significant delay between a change being made in SAP and that change flowing through to the data platform. Technological advances have resulted in shorter batch windows and more frequent refreshes during the day: in exceptional cases, small datasets can be refreshed every ten minutes or so, and medium-sized datasets perhaps every hour. The vast majority of data, however, is still refreshed only once per day.
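Shorter batch windows usually depend on incremental extraction: each run only picks up records changed since the previous run. Below is a minimal sketch of the common high-water-mark approach, assuming the source table has a reliable change-date column and a unique key (the field names `key`, `changed_on` and `amount` are hypothetical). Because such change dates are often day-granular, the sketch re-reads the watermark day itself and relies on an upsert by key to absorb the duplicates.

```python
from datetime import date


def incremental_extract(rows, watermark):
    """Return rows changed on or after `watermark`, plus the new watermark.

    Using >= (not >) re-reads the watermark day itself, because a
    day-granular change date cannot tell which records of that day
    were already loaded. Duplicates are absorbed by merge_by_key().
    """
    changed = [r for r in rows if r["changed_on"] >= watermark]
    new_watermark = max((r["changed_on"] for r in changed), default=watermark)
    return changed, new_watermark


def merge_by_key(target, changed):
    """Upsert changed rows into the target, keyed on the (hypothetical) business key."""
    for r in changed:
        target[r["key"]] = r
    return target


# Hypothetical source rows.
source = [
    {"key": "A", "changed_on": date(2024, 1, 1), "amount": 10},
    {"key": "B", "changed_on": date(2024, 1, 2), "amount": 20},
]
target = {}
batch, wm = incremental_extract(source, date(1900, 1, 1))
merge_by_key(target, batch)

# A later run: B is changed again and C is new, both on the watermark day.
source[1] = {"key": "B", "changed_on": date(2024, 1, 2), "amount": 25}
source.append({"key": "C", "changed_on": date(2024, 1, 2), "amount": 5})
batch, wm = incremental_extract(source, wm)
merge_by_key(target, batch)
```

Note that deletions in the source are not picked up by this approach at all, which is one of the gaps that change data capture addresses.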

The benefit of ETL is that it is relatively cheap to implement and maintain. ETL also prepares the data for analytical consumption by cleansing, unifying and linking it to other datasets in the organisation.
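As an example of the cleansing and unifying step for SAP data specifically, two transformations come up frequently: numeric keys stored internally with leading zeros (the ALPHA conversion, e.g. `0000012345`) and dates stored as 8-character `YYYYMMDD` strings, where `00000000` means ‘no date’. A minimal Python sketch, assuming the raw values arrive as strings; the sample row and the output column names are made up.

```python
from datetime import datetime


def strip_alpha(value):
    """Remove leading zeros from purely numeric keys; leave other keys unchanged."""
    value = value.strip()
    if value.isdigit():
        return value.lstrip("0") or "0"
    return value


def parse_dats(value):
    """Convert an SAP DATS string (YYYYMMDD) to a date; initial or blank values become None."""
    value = value.strip()
    if not value or value == "00000000":
        return None
    return datetime.strptime(value, "%Y%m%d").date()


# Made-up raw row as it might arrive from an extraction.
row = {"VBELN": "0000012345", "MATNR": "MAT-A", "ERDAT": "20240115", "AEDAT": "00000000"}
clean = {
    "sales_document": strip_alpha(row["VBELN"]),
    "material": strip_alpha(row["MATNR"]),
    "created_on": parse_dats(row["ERDAT"]),
    "changed_on": parse_dats(row["AEDAT"]),
}
```

Whether to strip leading zeros is a design decision: some teams keep the internal format so that keys can still be joined back to raw SAP tables.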

Data Integration pattern 2: Change Data Capture (CDC) and data replication

Change Data Capture is a mechanism that captures every change made to data in an application or database. The CDC log is then used to replicate the changes in a remote (target) database or application. For SAP, there are various mechanisms available, either based on the database (through triggers or the database log) or added as a ‘bolt-on’ by third-party applications (such as Fivetran HVR, Qlik Replicate or SNP Glue). SAP itself also offers add-ons, specifically SAP Landscape Transformation Replication Server (SLT) and SAP Replication Server.
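To show what ‘replicating the changes’ means mechanically, here is a minimal sketch that applies a CDC log of insert, update and delete operations, in log order, to a target keyed on the primary key. The log format (`op`, `key`, `row`) is a hypothetical simplification; the tools above each have their own formats and also deal with transactions, ordering guarantees and restarts.

```python
def apply_changes(target, change_log):
    """Apply CDC records to `target` (a dict keyed by primary key), in log order."""
    for change in change_log:
        op, key = change["op"], change["key"]
        if op in ("insert", "update"):
            # Treat both as an upsert, so re-applying a record is harmless.
            target[key] = change["row"]
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Hypothetical change log for an item table keyed on (VBELN, POSNR).
log = [
    {"op": "insert", "key": ("0000012345", "000010"), "row": {"MATNR": "MAT-A", "KWMENG": 5}},
    {"op": "insert", "key": ("0000012345", "000020"), "row": {"MATNR": "MAT-B", "KWMENG": 1}},
    {"op": "update", "key": ("0000012345", "000010"), "row": {"MATNR": "MAT-A", "KWMENG": 7}},
    {"op": "delete", "key": ("0000012345", "000020")},
]
replica = apply_changes({}, log)
print(replica)
```

Unlike the high-water-mark approach used in batch ETL, the delete in the log is propagated to the target.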

CDC and data replication are beautiful tools for getting SAP data into a target system in (near) real time. However, data replication for SAP data is more expensive than ETL. It requires a bigger investment (in both hardware and software) and has higher running costs, because replication applications are usually always on, whereas ETL applications can be switched off when no batch is running. The data is also replicated at a low technical level, usually table/field level, which means it still needs to be transformed after it is ingested into the data platform. The benefit, though, is that high-volume SAP data can be acquired in real time, at enterprise scale.

Data Integration pattern 3: Event streaming

Event streaming is not at all common in the context of SAP data and analytical platforms, although it is widely used outside the world of SAP. The most popular framework is the open-source Apache Kafka, but other frameworks are available.

SAP has partnered with Solace to provide event streaming, and this has recently started to gain some traction. Other trending topics such as Data Mesh and Data Fabric need event-streaming capabilities to work successfully. So although event streaming is not widely used in data warehousing today, it might become more relevant as the ability to stream high-volume data from complex sources in real time matures.
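One streaming concept that matters for SAP data is ordering: events for the same business object (say, one sales order) must be processed in order, while different objects can be processed in parallel. Kafka handles this by routing all records with the same key to the same partition. A minimal in-memory sketch of that idea; it uses `zlib.crc32` as the hash for simplicity (Kafka's default partitioner uses murmur2), and the partition count and event names are hypothetical.

```python
import zlib

NUM_PARTITIONS = 3  # hypothetical partition count


def partition_for(key):
    """Map a record key to a partition; the same key always lands in the same partition."""
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


def publish(partitions, key, event):
    partitions[partition_for(key)].append((key, event))


partitions = [[] for _ in range(NUM_PARTITIONS)]
events = [
    ("0000012345", "created"),
    ("0000067890", "created"),
    ("0000012345", "changed"),
    ("0000012345", "delivered"),
]
for key, event in events:
    publish(partitions, key, event)

# Within its partition, the events for order 0000012345 keep their publish order.
p = partitions[partition_for("0000012345")]
history = [event for key, event in p if key == "0000012345"]
```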

Now that we have discussed communication protocols, the types of SAP data sources and data integration patterns, it is time to bring them together in one picture. Below is an overview of how the different concepts work together to ‘get data out of SAP’.

I hope this helps you understand the options for ‘getting data out of SAP’. This article is only an introduction; if you want to define a strategy or select a tool for your organisation, you will have many more questions. Please feel free to ask them here, and I will try to answer!