Matillion has always been clear on its ambition to become the leading ‘data productivity cloud’ provider. It forms the backbone of many data warehouses for global enterprises. Matillion offers user-friendly low-code ETL capabilities and provides an open and flexible environment. This makes it a popular choice for small and medium enterprises, and for supporting departmental requirements in larger enterprises. To become the data integrator of choice for global data warehouse implementations, there was one challenge left: The ability to integrate SAP data at scale. With the introduction of the SAP ODP connector, Matillion now ticks this box. Releasing the Anaplan connector is the cherry on top.

Snap Analytics have worked very closely with Matillion on the development of the new SAP connector. I had the pleasure of working with the product development team and was involved in early testing. As part of the ‘Private Preview’ programme for the Anaplan connector, I ran a PoC for a global enterprise. I mention this here to make it clear that I am not a ‘neutral observer’. But then, one rarely is. The views I express here are my own and I have not been paid for this article.

The new way of connecting Matillion to SAP: The SAP ODP Connector

SAP ODP stands for Operational Data Provisioning. It is SAP’s way of providing data to third-party data consumers, with rich metadata and context. There’s plenty of information on the internet about ODP, but I’ll just refer you to one of my previous blog posts if you want to read up on this. The Matillion ODP connector uses a native SAP RFC connection to connect to ODP. The key benefits of using the Matillion SAP ODP connector for getting data out of SAP onto the data cloud are:

- There is no configuration required on the SAP side. You can immediately use all existing ODP data sources. This includes (but is not limited to) CDS views enabled for data extraction, HANA Calculation Views and the SAP ‘BW’ extractors (or S-API extractors)

- SAP handles deltas for delta-enabled data sources

- The data source includes metadata and is provided in the context of a business transaction, instead of as a technical view of the data
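To make the delta point more concrete, here is a minimal Python sketch of what applying a delta load to a target table amounts to conceptually. It is an illustration only, not how the Matillion connector is implemented: the field names `id` and `change_mode`, and the convention that `"D"` marks a deleted record, are assumptions made for this example.

```python
from typing import Dict, Iterable


def apply_delta(
    target: Dict[str, dict],
    delta_rows: Iterable[dict],
    key_field: str = "id",             # hypothetical business key
    mode_field: str = "change_mode",   # hypothetical change indicator
) -> Dict[str, dict]:
    """Apply delta rows to `target` in order: upsert, or delete on mode 'D'."""
    for row in delta_rows:
        key = row[key_field]
        if row.get(mode_field) == "D":
            # Deleted at source: drop it from the target if present.
            target.pop(key, None)
        else:
            # Created or updated at source: store the row without the indicator.
            target[key] = {k: v for k, v in row.items() if k != mode_field}
    return target


current = {"1": {"id": "1", "amount": 10}}
delta = [
    {"id": "1", "amount": 15, "change_mode": "U"},
    {"id": "2", "amount": 7, "change_mode": "C"},
    {"id": "1", "change_mode": "D"},
]
result = apply_delta(current, delta)
```

The value of a delta-enabled source is that the source system decides which rows end up in `delta`, so the target never needs a full reload to stay in sync.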

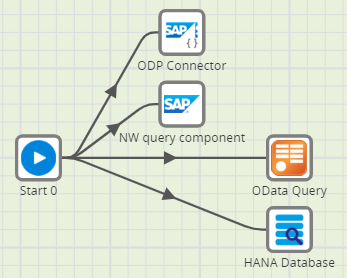

There are still other connectors available in Matillion to connect to SAP. Are they still relevant?

The SAP Netweaver Query connector is still useful to quickly connect to tables if there is no ODP data source available. When getting the data this way, you get the raw table and field names, so you will have to do more work to prepare the data for consumption.

The generic database query connector lets you get data out of SAP in the same format as the Netweaver Query connector. You do need an enterprise license for the database underpinning your SAP system. The price tag for this is prohibitive for most customers.

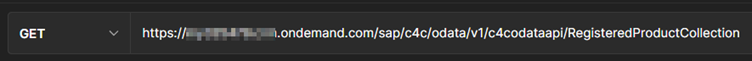

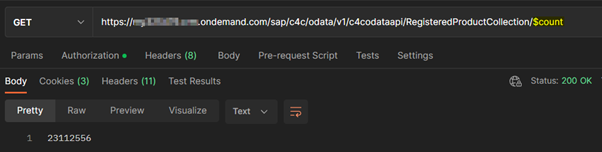

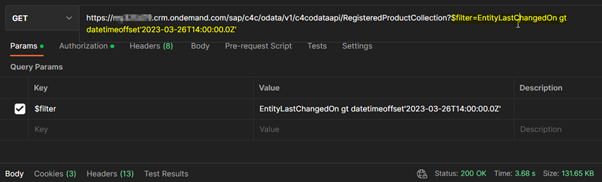

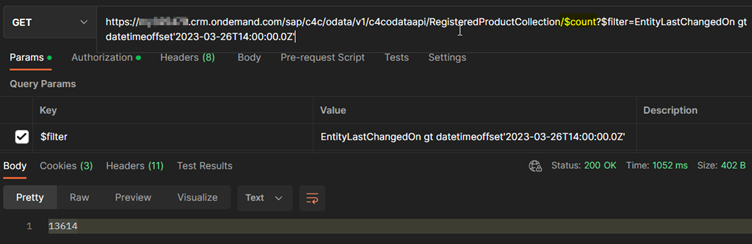

In recent years, Snap Analytics have implemented several cloud data warehouse solutions for our customers using the Matillion OData component to get data out of SAP. This also leverages the ODP framework, so it is a reasonable solution. It does require SAP configuration (NetWeaver Gateway). The OData connection also seems less performant than an RFC connection. As the ODP Connector has access to the same data sources as the OData connection (within the ODP framework), there is no longer a use case for the OData connector.

Screenshot of a Matillion job with four connection types to SAP. Use the ones with the SAP logo for the best experience.

Can the ODP connector cope with high volumes and near-real time requirements?

There are inherent limitations in the ODP framework. SAP did not design this framework with high-volume, low-latency scenarios in mind. Some vendors have developed proprietary code they deploy directly on the SAP system to replicate high volumes of SAP data in near-real time to a target environment. Products like SNP Glue, Theobald and Fivetran probably beat the ODP framework in terms of throughput performance. Whether or not you need these SAP specialist tools very much depends on your business requirements and data strategy. Bear in mind that you would still need a tool for data transformation. In many cases, adding an SAP specialist tool would increase the complexity of the overall solution.

Where does Anaplan fit in?

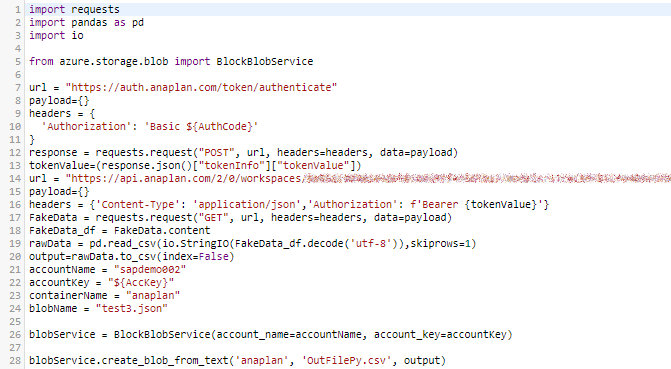

With Matillion it has always been easy to connect to a wide variety of data sources. Unfortunately, up to now, there was no predefined connector for Anaplan. Many enterprises use Anaplan as the planning application for ‘SAP’ data. You could get data out of Anaplan using Matillion, but you had to write your own Python code for it, and this was rather cumbersome.

Code snippet – connecting to Anaplan before Matillion had a standard connector.

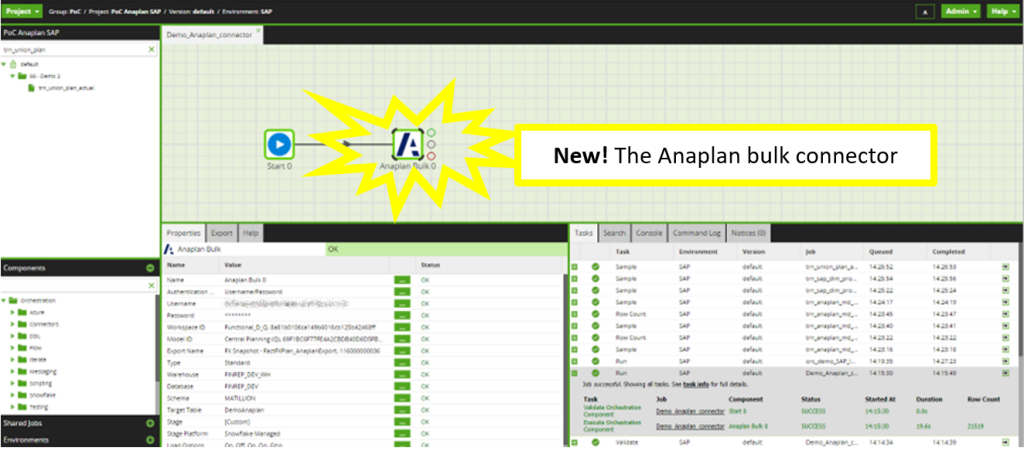
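For readers who cannot make out the screenshot, here is a rough Python sketch of what such hand-written code typically involves. It is not the code from the image. It assumes Anaplan's token-based authentication service and version 2 of its Integration API; the URLs, the `AnaplanAuthToken` header scheme and the response fields `tokenInfo.tokenValue` and `exports` should be checked against the current Anaplan documentation. The function names are made up for this example.

```python
import base64
import json
import urllib.request

# Assumed endpoints; verify against the Anaplan API documentation.
AUTH_URL = "https://auth.anaplan.com/token/authenticate"
API_BASE = "https://api.anaplan.com/2/0"


def build_auth_request(username: str, password: str) -> urllib.request.Request:
    """Build the basic-auth request that exchanges credentials for a token."""
    credentials = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return urllib.request.Request(
        AUTH_URL,
        method="POST",
        headers={"Authorization": "Basic " + credentials.decode("ascii")},
    )


def extract_token(auth_response_body: bytes) -> str:
    """Pull the token out of the (assumed) authentication response layout."""
    return json.loads(auth_response_body)["tokenInfo"]["tokenValue"]


def build_export_list_request(
    token: str, workspace_id: str, model_id: str
) -> urllib.request.Request:
    """Build the request that lists the export actions of one model."""
    url = f"{API_BASE}/workspaces/{workspace_id}/models/{model_id}/exports"
    return urllib.request.Request(
        url,
        headers={
            "Authorization": f"AnaplanAuthToken {token}",
            "Accept": "application/json",
        },
    )


def list_exports(username: str, password: str, workspace_id: str, model_id: str) -> list:
    """Authenticate, then return the export definitions of a model."""
    with urllib.request.urlopen(build_auth_request(username, password)) as resp:
        token = extract_token(resp.read())
    request = build_export_list_request(token, workspace_id, model_id)
    with urllib.request.urlopen(request) as resp:
        return json.loads(resp.read()).get("exports", [])
```

And this only lists the available exports. Running an export, polling the task and downloading the resulting file in chunks all need further calls, which is why the do-it-yourself route felt cumbersome.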

Now, all you need is your Anaplan username and password (or OAuth token) and you can simply navigate to your view or export dataset using the dropdown options for the Anaplan Workspace, Model and so on.

Hooray – the Anaplan connector is here – simply use the drop-down menus to connect to your Export or View.

So these new connectors, why do they matter?

Business users need easy access to all relevant data in the business, regardless of where the data originates. Too often, it takes too long to connect a new source system to a data platform, and business users create a shadow IT solution instead. Connecting standard business applications to a data platform should be plug and play. With Matillion’s standard connectors this is often the case (there were nearly 100 connectors last time I counted). SAP and Anaplan are business-critical applications for many enterprises, and with these new connectors Matillion can now truly become the leading ‘data productivity cloud’ provider.

Useful links

Unleash the Power of SAP Data With Matillion’s SAP ODP Connector

Anaplan Connector for Matillion: Next-Level Forecasts and Planning

4 ways to connect to SAP to Matillion

Credit: Title image by Freepik