This blog follows on from the ‘How to Automatically Shut Down an AWS Matillion Instance After a Schedule Finishes’ blog, but provides the steps for Azure rather than AWS.

I strongly recommend reading the introduction and the section titled The “Death Loop” Issue in that blog before proceeding with the steps below. Fortunately, configuring this for Azure is simpler than for AWS, because the Azure VM can authenticate using its managed identity. AWS, by contrast, requires the instance to be granted a custom role with a policy that allows it to turn itself off.

Step 1: Installing the Azure CLI

The Azure CLI is Microsoft's command-line tool for managing Azure resources. Here, we will use a single CLI command to deallocate the Matillion VM. Deallocating, rather than just stopping the operating system, releases the VM's compute resources, so you are no longer billed for compute while it is off. To begin, install the Azure CLI on the Matillion VM by following Microsoft's installation guide.
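Once the CLI is installed, it is worth confirming that it works with the VM's managed identity before relying on it in a schedule. The check below is a minimal sketch; `check_azure_cli` is a hypothetical helper name, while `az version`, `az login --identity` and `az account show` are standard Azure CLI commands. It assumes you run it on the Matillion VM itself.

```bash
#!/bin/bash
# Hypothetical helper: confirm the Azure CLI is installed and can log in
# with the VM's managed identity. Run it on the Matillion VM.
check_azure_cli() {
  if ! command -v az >/dev/null 2>&1; then
    echo "Azure CLI not found on PATH" >&2
    return 1
  fi
  # Show the installed CLI version
  az version
  # Log in with the managed identity; this fails if the VM has no identity enabled
  az login --identity --output none || return 1
  # Print the name of the subscription the identity is using
  az account show --query name --output tsv
}
```

If the login step fails, check that a managed identity is enabled on the VM before moving on to Step 2.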

Step 2: Creating a Deallocate Bash Script

SSH into the VM and create a file at /home/custom_scripts/deallocate_server containing the script below. Ensure that the centos user owns the file.

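```bash
#!/bin/sh
# Give the Matillion schedule time to finish before the VM is deallocated
sleep 30
# Authenticate the Azure CLI using the VM's managed identity
az login --identity
# Deallocate the VM (replace the placeholders with your resource group and VM name)
az vm deallocate --resource-group <MY_RESOURCE_GROUP> --name <MY_VM_NAME>
```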

The first command sleeps for 30 seconds to give the Matillion schedule enough time to complete safely before the VM is deallocated. The second command authenticates the Azure CLI using the VM’s managed identity. The final command deallocates the VM.
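If you would rather not hand-edit the file, the helper below writes the same three commands with your resource group and VM name filled in. It is a sketch: `write_deallocate_script` is a hypothetical function, not part of Matillion or the Azure CLI, and it assumes you run it as the centos user so that centos owns the resulting file.

```bash
#!/bin/bash
# Hypothetical helper: write the deallocate_server script with the resource
# group and VM name filled in, then make it readable/executable by its owner.
# Run as the centos user so that centos owns the file it creates.
write_deallocate_script() {
  local path="$1" resource_group="$2" vm_name="$3"
  if [ -z "$path" ] || [ -z "$resource_group" ] || [ -z "$vm_name" ]; then
    echo "usage: write_deallocate_script <path> <resource-group> <vm-name>" >&2
    return 1
  fi
  # Unquoted EOF so the resource group and VM name are expanded into the file
  cat > "$path" <<EOF
sleep 30
az login --identity
az vm deallocate --resource-group "$resource_group" --name "$vm_name"
EOF
  chmod 750 "$path"
}
```

For example, after `sudo mkdir -p /home/custom_scripts` and `sudo chown centos:centos /home/custom_scripts`, running `write_deallocate_script /home/custom_scripts/deallocate_server my-rg matillion-vm` as centos produces a file owned by centos. Here my-rg and matillion-vm are placeholders for your own values. The chmod is optional, since the Bash Script component invokes the file with `sh`.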

A couple of things to note:

- If you have a separate production Matillion instance, repeat the above steps on that instance, using its own resource group and VM name in its deallocate_server script.

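- The VM’s managed identity (shown as an Enterprise Application in Microsoft Entra ID) needs a role on the VM that permits deallocation, such as the built-in ‘Desktop Virtualization Power On Off Contributor’ role. Depending on how the VM was deployed, its identity may already have sufficient privileges.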
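If the VM's identity does not yet have a suitable role, the assignment can be made with the Azure CLI. The sketch below assumes a system-assigned managed identity and scopes the role to the VM only. `grant_deallocate_role` is a hypothetical helper name; the `az` commands are standard, but you need permission to create role assignments on the VM (for example Owner or User Access Administrator), so run this from your own workstation rather than from the Matillion VM.

```bash
#!/bin/bash
# Hypothetical helper: enable the VM's system-assigned managed identity
# (no-op if already enabled) and grant it a role that allows deallocation.
grant_deallocate_role() {
  local resource_group="$1" vm_name="$2"
  local role="Desktop Virtualization Power On Off Contributor"
  az vm identity assign --resource-group "$resource_group" --name "$vm_name" --output none
  # Look up the identity's principal ID and the VM's full resource ID
  local principal_id vm_id
  principal_id=$(az vm show --resource-group "$resource_group" --name "$vm_name" \
    --query identity.principalId --output tsv)
  vm_id=$(az vm show --resource-group "$resource_group" --name "$vm_name" \
    --query id --output tsv)
  # Scope the role assignment to this single VM
  az role assignment create --assignee-object-id "$principal_id" \
    --assignee-principal-type ServicePrincipal --role "$role" --scope "$vm_id"
}
```

Scoping the assignment to the VM, rather than the whole resource group, limits the identity to powering this one machine on and off.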

Step 3: Implementing in Matillion

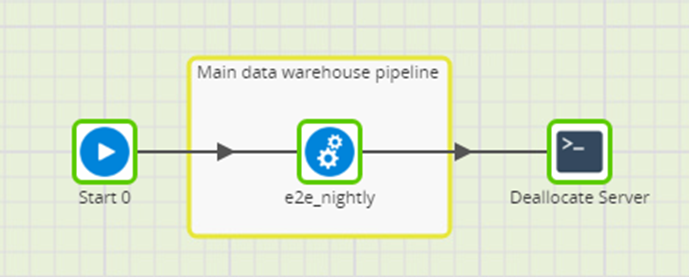

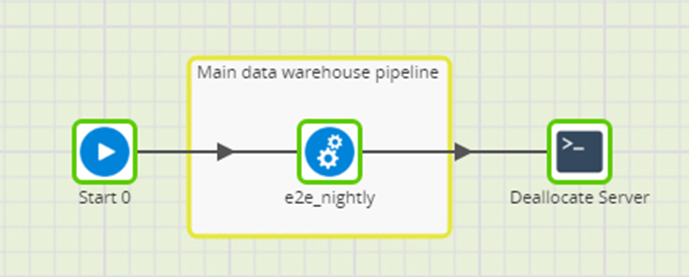

From here, we will use a Bash Script component to run the deallocate_server script created above. Create a wrapper job around your main pipeline and attach a Bash Script component to the end of it; this wrapper job is the one your Matillion schedule should run. Important: the connector from the main pipeline (in this case e2e_nightly) to the Bash Script component must be unconditional (grey) so that the server is turned off whether or not the pipeline succeeds. If the Bash Script component only runs on success, your VM will stay on whenever the pipeline fails (unless you have perfect pipelines…).

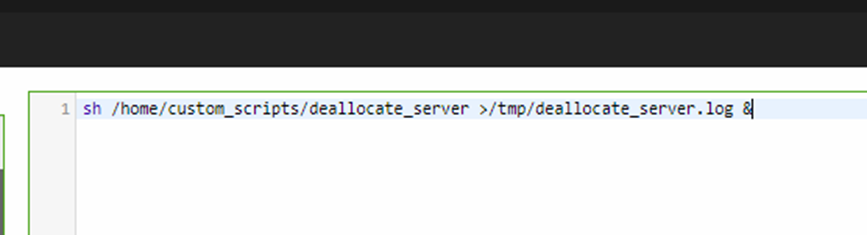

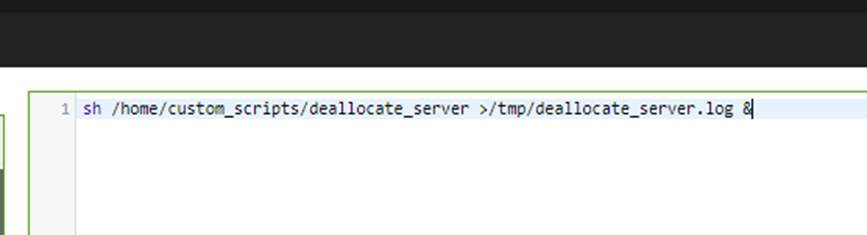

In the Bash Script component, add the command below, which runs the deallocate_server script created on the VM in Step 2.

```bash
sh /home/custom_scripts/deallocate_server >/tmp/deallocate_server.log 2>&1 &
```

Crucially, the ampersand (&) at the end of the command runs the script in the background, so the Bash Script component does not wait for it to finish. The component is therefore marked as complete immediately in the eyes of the Matillion task scheduler, and the schedule is marked as complete too. This avoids the aforementioned “death loop”, because the schedule no longer depends on the deallocation commands finishing. The redirection also writes the script's output to /tmp/deallocate_server.log for auditing purposes.
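To see the effect of the ampersand without touching a real VM, the demonstration below swaps in a stand-in script that only sleeps and logs. It is purely illustrative: fake_deallocate and the temporary paths are made up for the demo, and the command mirrors the one placed in the Bash Script component.

```bash
#!/bin/bash
# Demonstration only: a stand-in for deallocate_server that sleeps, then logs.
demo_dir=$(mktemp -d)
cat > "$demo_dir/fake_deallocate" <<'EOF'
sleep 2
echo "pretend deallocation finished"
EOF

start=$SECONDS
# Same shape as the component command: redirect output and run in the background
sh "$demo_dir/fake_deallocate" >"$demo_dir/deallocate.log" 2>&1 &
bg_pid=$!
launcher_seconds=$(( SECONDS - start ))
echo "launcher returned after ${launcher_seconds}s (background PID ${bg_pid})"
```

The launcher returns straight away while the stand-in keeps running; in the real setup, nothing waits on the background process, so the schedule finishes and the script deallocates the VM about 30 seconds later.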

Final thoughts

The solution proposed in this blog uses the Azure CLI to deallocate your Matillion VM from a simple Bash Script component. There are a number of alternative ways to achieve this, such as using a message queue to trigger a cloud function that shuts down the VM, and these are equally valid.

Once deallocation is configured, you can rest assured that your Matillion VM will shut down automatically after your schedule completes. Please feel free to reach out to me on LinkedIn or drop a comment on this blog if you have any further questions.